Welcome to the 11‑Thousand‑Per‑Minute Era

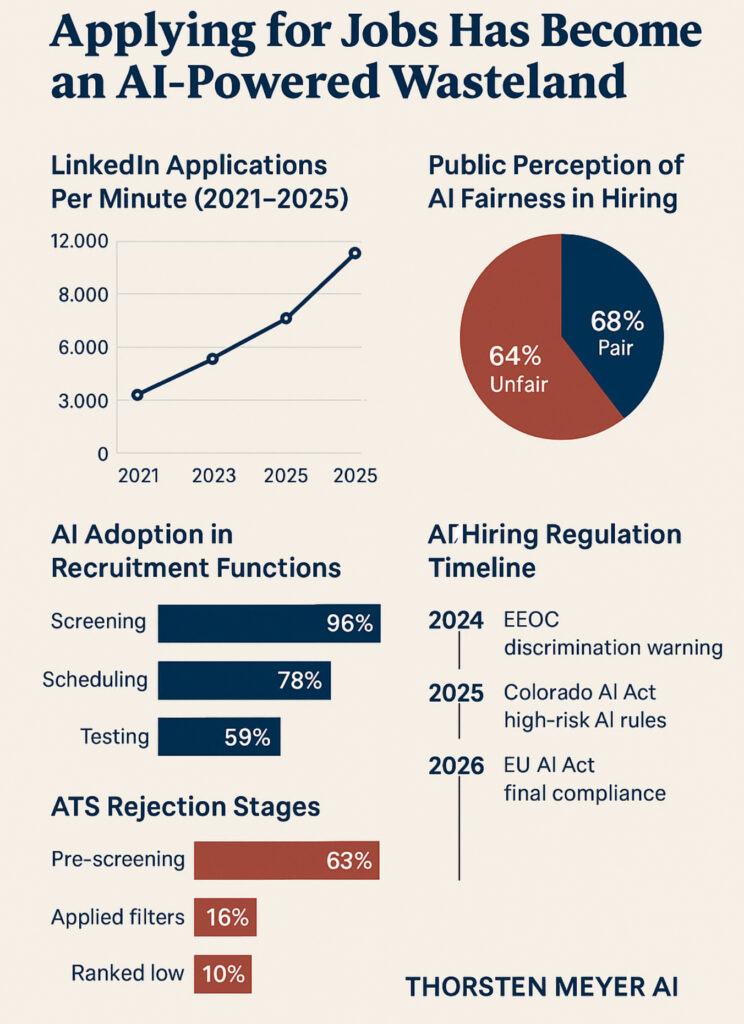

LinkedIn is now inundated with ≈11,000 applications every minute, up 45 % year‑on‑year. Recruiters describe the resulting “applicant tsunami” as an “AI‑versus‑AI” arms race in which bots write résumés and other bots screen them out.

How We Got Here: From ATS to Generative Agents

- Ubiquitous screening software. Ninety‑nine percent of Fortune 500 employers run résumés through an Applicant Tracking System (ATS); up to 70 % of CVs are discarded before any human ever looks at them.

- Volume inflation. A typical corporate posting still attracts ≥250 applicants, but AI tools make it effortless to blast out hundreds of tailored applications in seconds, swelling those numbers further.

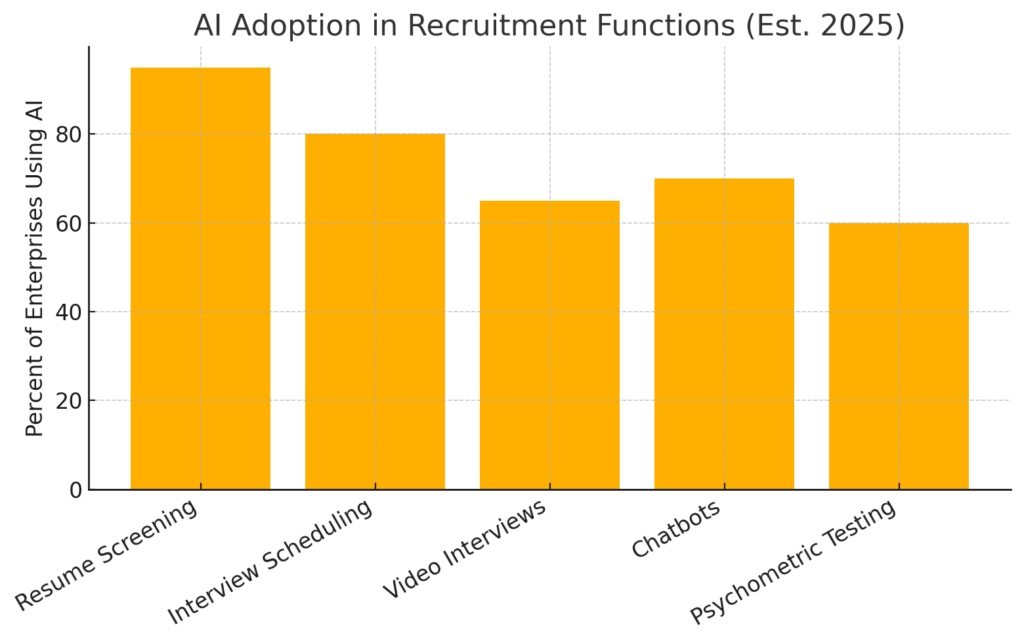

- AI on both sides. Hiring teams deploy chatbots for scheduling, psychometric games, or fully automated video interviews, while job‑seekers lean on prompt‑engineered cover letters, one‑click “loop” auto‑apply extensions and even deepfaked identities.

Figure: LinkedIn applications per minute (2021–2025), showing the explosive growth in job application volume.

Collateral Damage for Real People

Candidates report a sense of shouting into the void, and recruiters face new costs of their own:

- Jaye West sent 150 applications and was shocked when a manager actually phoned him—because every other touch‑point was a bot.

- Recruiters now fight “deepfake” applicants who mask faces or voices to slip through AI gatekeepers, forcing costly in‑person finals.

Bias, Lawsuits and the Human Cost

- Disability & accent bias. An Indigenous Deaf woman sued Intuit and HireVue after an automated speech‑analysis interview rated her a “poor communicator”.

- Global evidence. Australian research shows transcription error rates jump to 22 % for some accented speakers, risking systematic rejection.

- Other cases. Workday faces collective‑action claims over alleged age discrimination; HireVue ditched facial‑analysis scoring in 2021 amid validation concerns.
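To make a figure like "22 % transcription error" concrete: speech-to-text accuracy is commonly reported as word error rate (WER), the number of word substitutions, deletions and insertions needed to turn the machine transcript into the true one, divided by the length of the true transcript. The research cited above may define its metric differently, so treat this as a minimal sketch of the usual calculation. The function name and both sentences are made up for illustration.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # prev[j] holds the edit distance between the reference words seen
    # so far (minus the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # reference word dropped by the transcriber
                curr[j - 1] + 1,     # extra word inserted by the transcriber
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical interview answer and a flawed machine transcript of it.
spoken = "i led a team of nine engineers for two years"
transcribed = "i let a team of nine engineers for two"

print(f"{word_error_rate(spoken, transcribed):.0%}")  # prints 20%
```

Here one substituted word and one dropped word out of ten yield a 20 % error rate, and the substitution alone ("led" became "let") changes what a downstream scoring model thinks the candidate said.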

Regulators Start to Push Back—but Patchily

| Jurisdiction | Key Requirement | Status |
|---|---|---|
| NYC Local Law 144 | Independent bias audit + candidate notice for any Automated Employment Decision Tool | Enforced since July 2023 |
| Colorado AI Act (SB 205) | Transparency, risk assessment & AG notification for “high‑risk” AI in hiring; compliance by Feb 2026 | Amendments failed in 2025, full regime still looming |
| EEOC Guidance (US‑wide) | Reminds employers that algorithmic scoring may violate existing discrimination law | Issued Apr 2024 |
| EU AI Act (global suppliers) | Treats hiring tools as “high‑risk”; mandates rigorous testing and disclosure | Final text adopted 2024, staggered entry into force |

Why the System Feels Broken

- Scale without judgment. Keyword filters and simplistic scoring collapse nuanced experience into binary pass/fail signals.

- Feedback vacuum. Algorithms rarely explain rejections, so applicants cannot improve.

- Arms‑race escalation. Each new filter invites new evasion tools, inflating noise for recruiters and genuine candidates alike.

- Hidden bias. Training data—often U.S.‑centric, male and native‑speaker dominated—hard‑codes historical inequities.

What Employers Can Do Now

- Audit the funnel. Run disparate‑impact tests on every screening stage, not just the final interview.

- Keep a human in the loop. Require manual review for any applicant who meets posted minimums, even if ranked low by AI.

- Offer transparency. Post the name of any ATS or assessment vendor and a plain‑language explanation of how scores are generated.

- Provide actionable feedback. A short note on unmet requirements reduces applicant churn and reputational damage.

What Job‑Seekers Can Do

- Design for parsers. Use clear headings, linear chronology, and the exact keywords from the posting—then check with an ATS simulator.

- Quality over quantity. Auto‑apply bots rarely beat a tightly tailored submission plus a personal referral.

- Document interactions. Save vacancy snapshots and AI‑generated communications in case you later need to contest a decision.

- Know your rights. In NYC you can request the bias‑audit summary; in Colorado you’ll soon receive mandatory AI disclosures.
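If you have no ATS simulator to hand, a rough local check for the "design for parsers" tip is to measure how many of the posting's exact keywords appear verbatim in your résumé. The sketch below is only that: real ATS products parse and score differently, and the function names, keyword list and résumé text are invented for illustration.

```python
import re

TOKEN = re.compile(r"[a-z0-9+#]+")


def normalize(text: str) -> str:
    """Lowercase, strip punctuation, and pad with spaces for whole-word matching."""
    return " " + " ".join(TOKEN.findall(text.lower())) + " "


def keyword_coverage(resume: str, keywords: list[str]) -> tuple[float, list[str]]:
    """Return the share of keywords found verbatim, plus the ones missing."""
    haystack = normalize(resume)
    missing = [kw for kw in keywords if normalize(kw) not in haystack]
    found = len(keywords) - len(missing)
    coverage = found / len(keywords) if keywords else 1.0
    return coverage, missing


# Hypothetical posting keywords and résumé excerpt.
posting_keywords = ["Python", "SQL", "stakeholder management", "A/B testing"]
resume_text = """
Data analyst with five years of Python and SQL experience.
Ran A/B testing programs for the growth team and managed stakeholders.
"""

coverage, missing = keyword_coverage(resume_text, posting_keywords)
print(coverage, missing)  # prints 0.75 ['stakeholder management']
```

The miss is instructive: "managed stakeholders" means the same thing to a human reader, but an exact-phrase filter would not count it, which is why mirroring the posting's wording matters.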

Where We’re Headed

Unless regulation and practice converge on explainability, limited automation and meaningful human oversight, hiring risks becoming an opaque contest of algorithmic one‑upmanship that serves neither businesses nor talent. The tools are not leaving; the challenge is restoring trust and fairness before applicants—and employers—abandon the system entirely.