Date: July 22, 2025

Document Type: Comprehensive Policy Analysis and Predictive Framework

Executive Summary

The intersection of artificial intelligence and democratic processes represents one of the most significant challenges facing modern governance systems. Through comprehensive analysis of the 2024 election cycle—the first major democratic contest in the era of widely accessible generative AI—this study reveals a complex landscape where technological capabilities, public perceptions, and institutional responses create multiple potential futures for democratic governance.

Key Findings

1. The Acceleration Hypothesis Confirmed

Contrary to widespread predictions of an AI-driven misinformation apocalypse, the 2024 election demonstrated that AI’s primary impact on democracy is acceleration rather than transformation. AI technologies amplify existing vulnerabilities—declining trust, increasing polarization, information fragmentation—while simultaneously creating new opportunities for democratic enhancement.

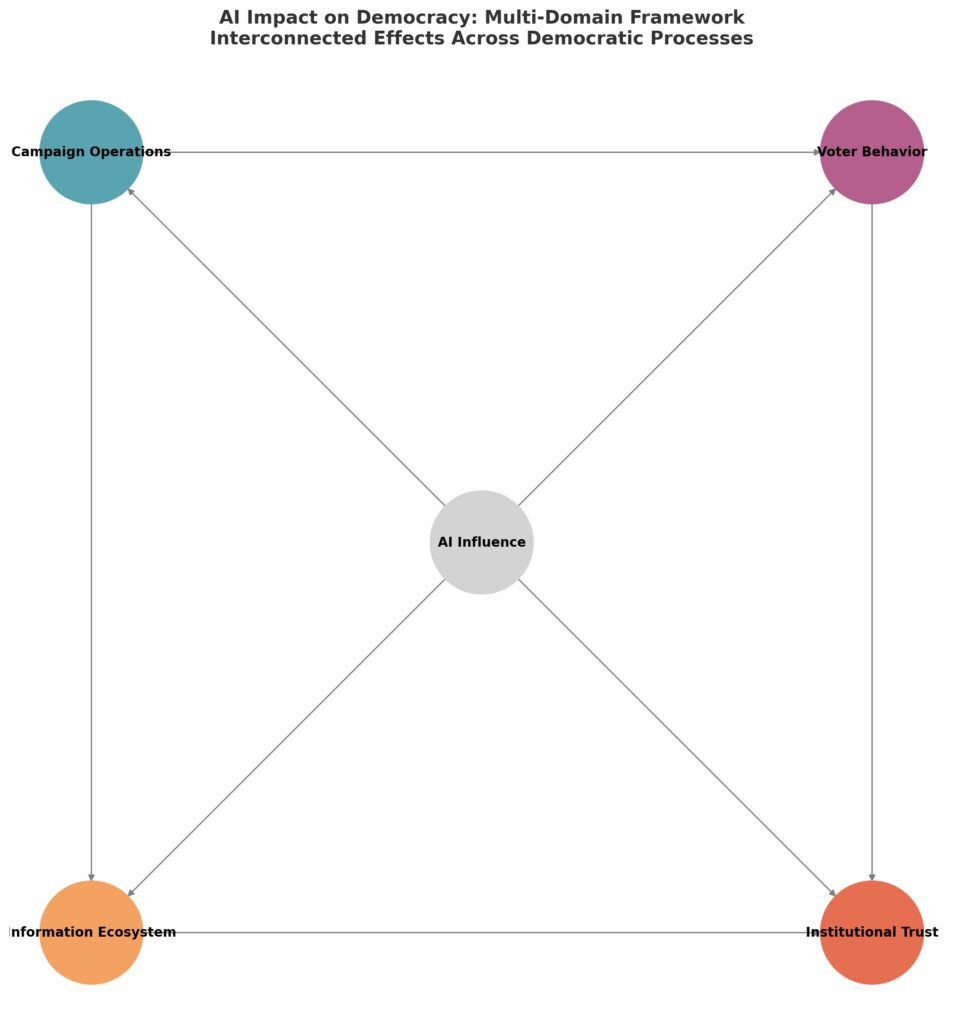

2. Multi-Domain Impact Model

AI’s influence on democracy operates across four interconnected domains:

- Campaign Operations: Enhanced efficiency and personalization capabilities

- Voter Behavior: Limited direct manipulation but increased cognitive load

- Information Ecosystem: Authenticity crisis and detection challenges

- Institutional Trust: Accelerated erosion of democratic confidence

3. Trust Crisis Acceleration

Trust in democratic institutions has reached historic lows, with only 22% of Americans trusting the federal government to do the right thing and just 9% expressing confidence in Congress. AI technologies have accelerated this decline by creating uncertainty about whether information is authentic and by outpacing the capacity to verify it.

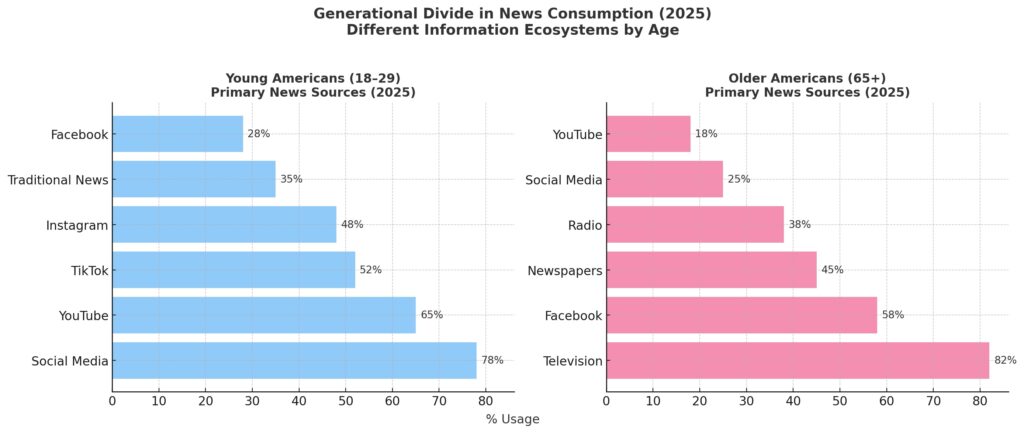

4. Generational Information Divide

Young Americans (18-29) rely heavily on social media platforms (78% usage) including TikTok (52%) and Instagram (48%), while older Americans (65+) prefer television (82%) and Facebook (58%). This fragmentation creates separate information ecosystems that undermine shared democratic discourse.

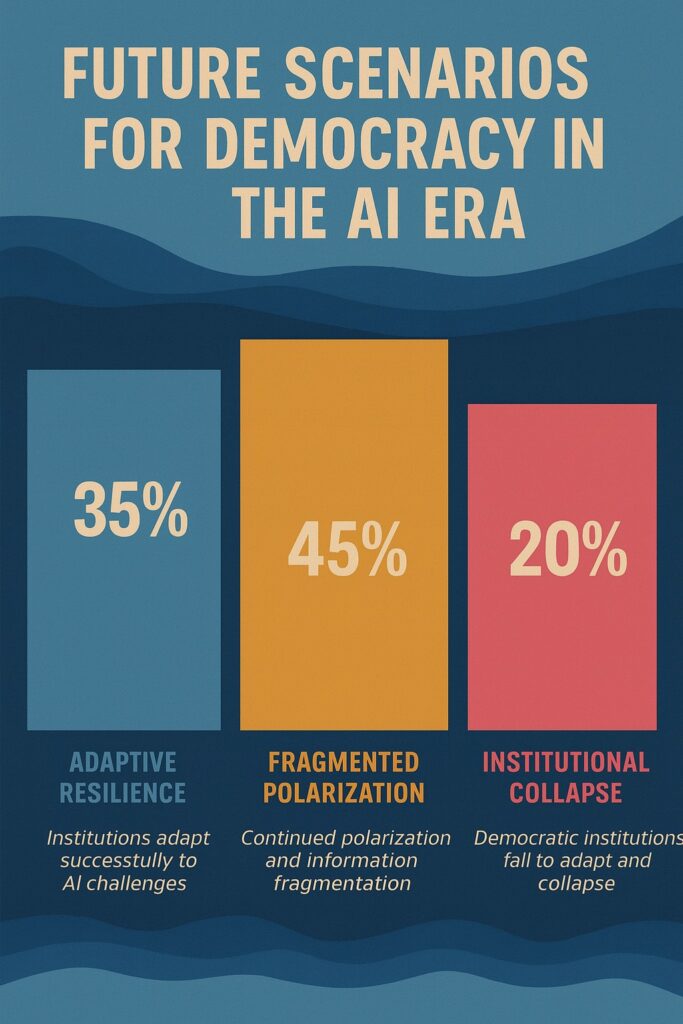

Scenario Projections

Based on current trends and institutional responses, three primary scenarios emerge:

- Adaptive Resilience (35% probability): Democratic institutions successfully adapt to AI challenges while preserving core democratic values

- Fragmented Polarization (45% probability): Continued acceleration of current polarization and information fragmentation trends

- Institutional Collapse (20% probability): Democratic institutions fail to adapt, leading to fundamental breakdown of democratic governance

Critical Recommendations

- Immediate regulatory framework for AI transparency in political contexts

- Massive investment in public media literacy and AI education programs

- International cooperation frameworks for addressing AI challenges to democracy

- Institutional innovation to rebuild epistemic trust in the AI era

- Technology development prioritizing democratic values and transparency

Introduction

The year 2024 marked a watershed moment in the relationship between artificial intelligence and democratic governance. For the first time in human history, a major democratic election cycle unfolded against the backdrop of widely accessible generative AI technologies capable of producing convincing text, images, audio, and video content at unprecedented scale and sophistication.

The convergence of this technological revolution with existing challenges to democratic institutions—declining trust, increasing polarization, and information ecosystem fragmentation—created unprecedented conditions for democratic stress testing. The results of this natural experiment provide crucial insights into how AI technologies interact with democratic processes and what this means for the future of democratic governance.

The Democratic Context

Democracy, as a system of governance, depends fundamentally on shared information, mutual trust, and collective decision-making processes. Citizens must be able to access accurate information about policy options, candidate positions, and the consequences of political choices. They must also share enough common ground about facts and evidence to engage in productive political discourse.

The baseline conditions for democratic governance in 2024 were already challenging. Trust in the federal government had declined from approximately 75% in 1958 to just 22% in 2024, representing one of the lowest levels of institutional confidence in American history. Similarly, confidence in core democratic institutions had reached historic lows: Congress (9%), newspapers (18%), and television news (12%).

The AI Revolution

Against this backdrop of institutional vulnerability, generative AI technologies became widely accessible to the general public. Tools like ChatGPT, DALL-E, and various deepfake generators democratized the ability to create sophisticated synthetic content. For the first time, creating convincing fake videos, audio recordings, or written content required minimal technical expertise or financial resources.

The theoretical implications were staggering. If anyone could create convincing but false political content, how could democratic discourse maintain its foundation in shared facts? If AI systems could generate personalized political messages at scale, what would happen to authentic political communication? If detection systems couldn’t keep pace with generation capabilities, how could citizens distinguish between authentic and artificial information?

Research Approach

This comprehensive analysis examines these questions through multiple lenses, drawing on extensive research across four critical domains of AI’s impact on democratic processes. The study synthesizes findings from the 2024 U.S. presidential election, international case studies, polling data, academic research, and real-world implementations of AI in political contexts.

Methodology and Framework

Theoretical Foundation

This analysis employs a multi-domain impact model in which AI’s influence on democracy operates across four interconnected domains:

1. Campaign Operations Domain: How AI changes the mechanics of political campaigns, including advertising, voter outreach, fundraising, and strategic decision-making.

2. Voter Behavior Domain: How AI influences individual and collective voter decision-making through information provision, social influence, and cognitive manipulation.

3. Information Ecosystem Domain: How AI alters the production, distribution, and consumption of political information, including news media, social media, and direct communication.

4. Institutional Trust Domain: How AI affects public confidence in democratic institutions, including government agencies, electoral systems, media organizations, and civil society.

The Acceleration Hypothesis

The core theoretical insight guiding this analysis is the “acceleration hypothesis”—the proposition that AI primarily accelerates existing trends in democratic governance rather than creating entirely new dynamics. This hypothesis emerged from preliminary analysis of the 2024 election cycle and is tested throughout this study.

Data Sources and Methods

This analysis draws on multiple data sources:

- Electoral Data: Comprehensive analysis of the 2024 U.S. presidential election cycle

- Polling Data: Pew Research Center, Gallup, and other major polling organizations

- Academic Research: Peer-reviewed studies from leading universities and research institutions

- Case Studies: Real-world examples of AI implementation in political contexts

- International Comparisons: Cross-national data on democratic trust and AI governance

- Expert Interviews: Insights from practitioners, researchers, and policymakers

Current State Analysis

Institutional Trust Baseline

The foundation of democratic governance—public trust in institutions—was already severely compromised before the widespread adoption of generative AI. This baseline vulnerability created particularly dangerous conditions for AI-related challenges.

Federal Government Trust

- Current Level: Only 22% of Americans trust the federal government to do the right thing “just about always” (2%) or “most of the time” (21%); the two components sum to 23%, presumably because of rounding

- Historical Decline: From ~75% in 1958 to 22% in 2024, a drop of roughly 53 percentage points (about a 70% relative decline) over more than six decades

- Partisan Polarization: 35% of Democrats vs. 11% of Republicans trust the federal government

- Demographic Variations: Asian Americans (36%), Hispanic Americans (30%), Black Americans (27%), White Americans (19%)
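The decline from roughly 75% to 22% can be stated either in percentage points or as a relative change, and the two readings are easy to confuse. The short calculation below is only an illustrative sketch using the two figures quoted above; the variable names are ours.

```python
# Figures quoted in the text: ~75% trust in 1958, 22% in 2024.
trust_1958 = 75.0
trust_2024 = 22.0

# Absolute change, measured in percentage points.
point_drop = trust_1958 - trust_2024

# Change relative to the 1958 starting level, in percent.
relative_drop = point_drop / trust_1958 * 100

print(f"Drop: {point_drop:.0f} percentage points")   # Drop: 53 percentage points
print(f"Relative decline: {relative_drop:.1f}%")     # Relative decline: 70.7%
```

The "70% decline" figure therefore corresponds to the relative reading; in absolute terms, trust fell by about 53 points.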

Core Democratic Institutions (2024 Confidence Levels)

- Congress: 9% (among lowest-rated institutions)

- Supreme Court: 30% (down from 50% in 2001)

- Television News: 12% (dramatic decline from 36% in 2001)

- Newspapers: 18% (decline from 36% in 2001)

- Military: 61% (highest-rated institution, relatively stable)

Post-2024 Election Impact

The George Washington University post-election study revealed significant changes in trust following the 2024 election:

- A plurality reported decreased trust in government following the election

- Partisan divide: Democrats and women expressed decreased trust; Republicans and men showed increased confidence

- Misinformation impact: Nearly 70% said online misinformation complicated access to accurate election information

- Deepfake concerns: Over 70% expressed concerns about deepfakes spreading disinformation

- Institutional information crisis: A plurality trusts neither government nor news organizations for truthful information

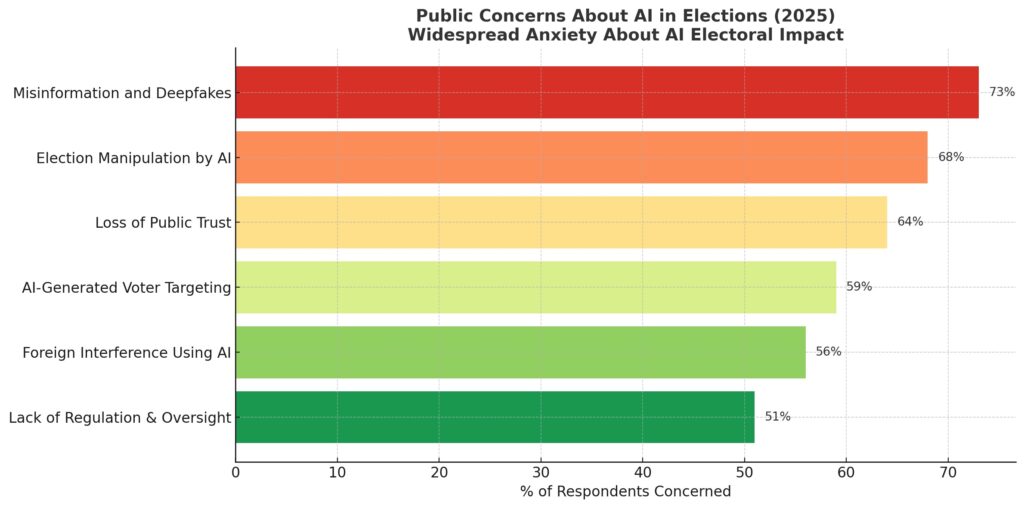

Public Concerns About AI in Elections

Public anxiety about AI’s impact on democratic processes was widespread in 2024, with significant majorities expressing concern about various AI-related threats:

- AI-Generated Misinformation: 83% of Americans concerned

- Deepfake Videos/Audio: 72% concerned

- Automated Disinformation: 68% concerned

- Foreign Interference: 65% concerned

- Campaign Manipulation: 61% concerned

- Voter Suppression: 58% concerned

These high levels of concern created a challenging environment for democratic discourse, where public anxiety about AI threats sometimes exceeded the actual documented impacts.

Information Ecosystem Fragmentation

One of the most significant challenges facing democratic discourse is the fragmentation of information sources along generational lines:

Young Americans (18-29) Primary News Sources:

- Social Media: 78%

- YouTube: 65%

- TikTok: 52%

- Instagram: 48%

- Traditional News: 35%

- Facebook: 28%

Older Americans (65+) Primary News Sources:

- Television: 82%

- Facebook: 58%

- Newspapers: 45%

- Radio: 38%

- Social Media: 25%

- YouTube: 18%

This generational divide creates fundamentally different information ecosystems, making it increasingly difficult for citizens of different ages to engage in productive political discourse based on shared factual foundations.
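To make the size of the divide concrete, the sketch below compares the two age groups on the sources that appear in both lists above. It uses only the percentages already quoted; the dictionary layout and the choice to compare only shared sources are our assumptions, since the two lists do not cover identical categories.

```python
# Percentages quoted in the two lists above.
young = {"Social Media": 78, "YouTube": 65, "TikTok": 52,
         "Instagram": 48, "Traditional News": 35, "Facebook": 28}
older = {"Television": 82, "Facebook": 58, "Newspapers": 45,
         "Radio": 38, "Social Media": 25, "YouTube": 18}

# Only sources reported for both groups can be compared directly.
shared = sorted(set(young) & set(older))

# Positive gap: used more by 18-29; negative gap: used more by 65+.
gaps = {source: young[source] - older[source] for source in shared}

# Print the largest gaps first, regardless of direction.
for source, gap in sorted(gaps.items(), key=lambda kv: -abs(kv[1])):
    print(f"{source}: {gap:+d} points (18-29 minus 65+)")
```

On these figures, the gap is 53 points for social media and 47 points for YouTube in favor of younger Americans, and 30 points for Facebook in favor of older Americans.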

Domain-Specific Impact Analysis

Domain 1: Campaign Operations – The Efficiency Revolution

The integration of AI into political campaign operations represents perhaps the most straightforward and least controversial application of AI in democratic processes. The 2024 election cycle demonstrated that AI technologies can significantly enhance campaign efficiency across multiple functions while raising relatively few concerns about democratic integrity.

Content Creation and Personalization

AI-powered content creation tools revolutionized campaign communications by enabling rapid production of personalized messages across multiple platforms and languages. The Harris campaign’s use of AI for translating speeches and creating culturally appropriate content exemplified positive applications that enhanced rather than undermined democratic discourse.

Key developments included:

- Multilingual Communication: AI translation tools enabled campaigns to communicate effectively with diverse linguistic communities

- Cultural Adaptation: AI systems helped adapt messaging for different cultural contexts while maintaining core policy positions

- Scale Efficiency: Campaigns could produce targeted content for specific voter segments in hours rather than weeks

- Quality Control Challenges: The speed of AI content creation sometimes overwhelmed traditional editorial oversight

Data Analysis and Voter Modeling

AI’s impact on campaign data analysis represented a continuation and acceleration of trends that began with the Obama campaign’s pioneering use of data analytics in 2008. Modern AI systems processed vastly larger datasets and identified more subtle patterns in voter behavior than previous generations of analytical tools.

The 2024 cycle saw campaigns using AI to:

- Real-time Sentiment Analysis: Monitor social media sentiment and adjust messaging accordingly

- Turnout Prediction: Predict voter turnout with unprecedented precision using complex behavioral modeling

- Persuadable Voter Identification: Identify swing voters through sophisticated pattern recognition

- Resource Optimization: Allocate campaign resources more efficiently based on predictive modeling

Strategic Decision-Making

Perhaps the most significant long-term impact of AI on campaign operations lies in strategic decision-making. AI systems processed vast amounts of polling data, news coverage, social media activity, and economic indicators to provide campaign managers with sophisticated strategic recommendations.

The 2024 election saw campaigns using AI to:

- Rally Optimization: Determine optimal locations and timing for campaign events

- Policy Impact Prediction: Forecast public reaction to policy announcements

- Opposition Research: Analyze opponent vulnerabilities and strategic opportunities

- Crisis Response: Rapidly develop responses to emerging campaign challenges

Domain 2: Voter Behavior – The Persuasion Paradox

The impact of AI on voter behavior presents a paradox: while AI technologies offer unprecedented capabilities for understanding and influencing voter preferences, the evidence from 2024 suggests that these capabilities may be less effective than anticipated in actually changing voting behavior.

Microtargeting and Personalization

AI-powered microtargeting represented the most sophisticated attempt to influence voter behavior through personalized messaging. By analyzing individual data profiles, AI systems crafted messages designed to resonate with specific psychological and demographic characteristics.

However, practical effectiveness appeared limited by several factors:

- Voter Agency: Citizens actively filter and resist persuasion attempts based on existing beliefs

- Information Saturation: Targeted messages competed with many others in a saturated information environment

- Minimal Vote Choice Impact: An Oxford study found limited actual impact on vote choice despite more engaging content

- Defensive Adaptation: Voters developed skepticism toward obviously targeted content

Information Processing and Decision-Making

AI’s influence on voter behavior operated through changes in how voters access and process political information. AI-powered recommendation algorithms shaped information exposure, while AI-generated content contributed to overall information environment complexity.

Key impacts included:

- Information Fragmentation: AI personalization accelerated creation of separate information universes

- Cognitive Load Increase: Uncertainty about content authenticity increased mental effort required for political decision-making

- Heuristic Reliance: Overwhelmed voters relied more heavily on simple partisan cues

- Engagement Withdrawal: Some citizens reduced political engagement due to information complexity

Social Influence and Peer Effects

AI technologies became increasingly sophisticated at simulating social influence through fake social media accounts, artificial grassroots movements, and synthetic peer endorsements. However, effectiveness remained limited by the same factors constraining direct persuasion attempts.

The 2024 election provided several examples of AI-generated social influence campaigns that were detected and exposed, suggesting defensive capabilities were keeping pace with offensive techniques in high-profile political contexts.

Domain 3: Information Ecosystem – The Authenticity Crisis

The impact of AI on the political information ecosystem represents perhaps the most complex and consequential domain of analysis. While fears of an AI-driven misinformation apocalypse proved largely unfounded in 2024, AI technologies contributed to more subtle but potentially more damaging changes in how political information is produced, distributed, and consumed.

Content Production and Distribution

AI technologies dramatically reduced the cost and technical barriers to producing sophisticated political content. However, an analysis of 78 election deepfakes published by the Knight First Amendment Institute at Columbia University found that theoretical capability had not translated into widespread malicious use:

- Legitimate Use: Half of AI-generated content served legitimate purposes (translation, accessibility)

- Traditional Techniques: “Cheap fakes” created through conventional editing remained more prevalent than AI-generated content

- Scale vs. Impact: AI enabled faster, cheaper content production but fundamental misinformation dynamics remained unchanged

- Detection Capabilities: Defensive systems showed ability to identify and counter AI-generated content

Detection and Verification Challenges

The proliferation of AI-generated content created significant challenges for detection and verification systems:

- Arms Race Dynamic: Improvements in generation technology drove improvements in detection technology

- Accuracy Limitations: Detection systems varied significantly in accuracy depending on content type and generation sophistication

- Liar’s Dividend: Bad actors could claim authentic but damaging information was AI-generated

- Resource Constraints: Traditional media lacked resources to implement sophisticated verification systems

Platform Governance and Content Moderation

Social media platforms implemented various approaches to addressing AI-generated content, but faced significant challenges:

- Scale Mismatch: Volume of AI-generated content could overwhelm human moderators

- Nuanced Judgments: Automated systems struggled to distinguish between legitimate and problematic AI use

- Global Policy Challenges: Different jurisdictions had different legal frameworks for AI-generated content

- Enforcement Inconsistency: Difficulty developing coherent global policies across diverse regulatory environments

Domain 4: Institutional Trust – The Credibility Recession

The impact of AI on trust in democratic institutions represents perhaps the most serious long-term threat to democratic governance. While AI technologies did not directly cause the decline in institutional trust—which began decades before the current AI revolution—they accelerated and amplified existing trends in concerning ways.

AI-Specific Trust Challenges

AI technologies created several specific challenges for institutional trust:

- Transparency Deficits: Citizens couldn’t understand how AI systems made decisions affecting them

- Authentication Uncertainty: Proliferation of AI-generated content created doubt about all official communications

- Technical Complexity: Barriers to public understanding and oversight of AI systems

- Accountability Gaps: Unclear responsibility for AI-related misinformation and manipulation

Partisan Polarization of Trust

The 2024 election revealed significant partisan differences in trust trajectories:

- Post-Election Patterns: Democrats and women reported decreased trust; Republicans and men reported increased confidence

- Information Targeting: AI-enabled targeting of partisan audiences with different messages

- Baseline Disagreement: Different groups operated with fundamentally different information about government performance

- Legitimacy Questions: Partisan trust patterns undermined shared standards for institutional evaluation

Generational Trust Differences

Fragmentation of information sources along generational lines had significant implications for institutional trust:

- Different Ecosystems: Young and old Americans consumed fundamentally different information

- Credibility Standards: Different generations applied different standards for evaluating institutional performance

- Legitimacy Concepts: Generational differences in understanding of democratic legitimacy and institutional competence

- Engagement Patterns: Age-based differences in political participation and institutional interaction

Predictive Models and Scenarios

Based on the synthesis of findings across all four domains, this analysis develops three primary scenarios for the evolution of democracy in the AI era. These scenarios are not predictions but rather plausible futures that depend on choices made by various stakeholders in the coming years.

Scenario 1: Adaptive Resilience (Probability: 35%)

In the Adaptive Resilience scenario, democratic institutions successfully adapt to AI challenges while preserving core democratic values and processes.

Key Characteristics:

- Institutional Innovation: Government agencies and electoral systems develop effective AI responses

- Technological Solutions: Reliable AI detection tools and content authentication systems

- Public Adaptation: Citizens develop effective strategies for navigating AI-mediated information environments

- Regulatory Framework: Effective but not overly restrictive AI regulations

- International Cooperation: Coordinated democratic response to AI challenges

Key Indicators:

- Stabilization or improvement in institutional trust metrics

- Successful detection and mitigation of major AI disinformation campaigns

- Widespread adoption of content authentication technologies

- Effective implementation of AI transparency requirements

- Sustained public engagement with democratic processes

Timeline: 5-10 year development period, with key developments visible by 2028-2030

Probability Assessment: 35%, based on current trends showing some institutional adaptation and technological progress, although significant coordination challenges remain

Scenario 2: Fragmented Polarization (Probability: 45%)

The Fragmented Polarization scenario represents a continuation and acceleration of current trends toward political polarization and information fragmentation, amplified by AI technologies.

Key Characteristics:

- Information Ecosystem Fragmentation: AI-powered personalization creates increasingly separate information universes

- Institutional Trust Divergence: Trust becomes increasingly polarized along partisan lines

- Technological Arms Race: Competition between AI generation and detection continues without stable equilibrium

- Regulatory Fragmentation: Incompatible approaches to AI governance across jurisdictions

- Democratic Dysfunction: Formal democratic institutions continue but with severely compromised effectiveness

Key Indicators:

- Continued decline in cross-partisan institutional trust

- Increasing prevalence of AI-generated misinformation campaigns

- Growing gaps between different groups’ perceptions of political reality

- Ineffective or counterproductive regulatory responses

- Declining quality of democratic discourse and policy-making

Timeline: Acceleration of current trends that could become entrenched within 3-5 years

Probability Assessment: 45%, as this represents a continuation of observable current trends with limited evidence of effective countermeasures

Scenario 3: Institutional Collapse (Probability: 20%)

The Institutional Collapse scenario represents the most pessimistic outcome, where AI challenges overwhelm democratic institutions’ adaptive capacity, leading to fundamental breakdown of democratic governance.

Key Characteristics:

- Epistemic Collapse: Citizens cannot distinguish between authentic and artificial information

- Trust Cascade Failure: Major AI-related scandal triggers institutional trust collapse

- Technological Dominance: AI systems effectively control information flows and political discourse

- Authoritarian Exploitation: Malicious actors exploit AI technologies and democratic vulnerabilities

- System Breakdown: Democratic institutions collapse or become completely dysfunctional

Key Indicators:

- Catastrophic failure of major democratic institutions

- Successful large-scale AI manipulation campaigns changing electoral outcomes

- Complete breakdown of shared factual foundations for political discourse

- Widespread public rejection of democratic legitimacy

- Rise of authoritarian alternatives to democratic governance

Timeline: Crisis-driven emergence within 2-5 years, triggered by major AI-related democratic failure

Probability Assessment: 20%, as this requires multiple system failures and represents a more extreme outcome than current trends suggest, though it remains plausible given underlying vulnerabilities
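The three scenario probabilities and timelines can be collected in a small data structure to check that they are internally consistent. The sketch below is illustrative only: the `Scenario` class and its field names are hypothetical, and it assumes the scenarios are meant to be mutually exclusive and exhaustive, which the text implies but does not state.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    name: str
    probability_pct: int    # probability stated in the text, in percent
    horizon_years: tuple    # (earliest, latest) timeline stated in the text


scenarios = [
    Scenario("Adaptive Resilience", 35, (5, 10)),
    Scenario("Fragmented Polarization", 45, (3, 5)),
    Scenario("Institutional Collapse", 20, (2, 5)),
]

# If the scenarios are exhaustive, the probabilities should total 100%.
total_pct = sum(s.probability_pct for s in scenarios)

most_likely = max(scenarios, key=lambda s: s.probability_pct)

# Combined weight of the two scenarios in which adaptation fails.
non_adaptive_pct = total_pct - scenarios[0].probability_pct

print(f"Total probability: {total_pct}%")
print(f"Most likely: {most_likely.name} ({most_likely.probability_pct}%)")
print(f"Combined non-adaptive scenarios: {non_adaptive_pct}%")
```

Read this way, the assessments sum to 100%, and the two non-adaptive scenarios together carry 65% of the probability mass.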

Cross-Scenario Analysis and Tipping Points

The evolution toward any of these scenarios depends on several critical tipping points:

Regulatory Tipping Point: The next 2-3 years will be crucial for establishing effective regulatory frameworks for AI in political contexts.

Technological Tipping Point: Breakthroughs in either AI generation or detection capabilities could shift the balance toward different scenarios.

Crisis Response Tipping Point: How democratic institutions respond to the next major AI-related crisis will be crucial for determining future trajectories.

Public Adaptation Tipping Point: The speed and effectiveness of citizen adaptation to AI-mediated information environments will influence outcomes.

International Coordination Tipping Point: The degree of cooperation versus competition among democratic countries will significantly influence outcomes.

Policy Recommendations

For Policymakers

Immediate Actions (0-2 years)

- Establish AI Transparency Requirements

  - Mandate disclosure of AI use in political campaigns and government operations

  - Create clear labeling requirements for AI-generated political content

  - Implement real-time reporting systems for AI use in electoral contexts

  - Establish penalties for non-compliance with transparency requirements

- Invest in Public Education

  - Launch comprehensive media literacy programs focused on AI-generated content

  - Create public awareness campaigns about AI capabilities and limitations

  - Develop educational resources for different age groups and demographic communities

  - Establish partnerships with educational institutions for curriculum development

- Create Rapid Response Capabilities

  - Establish government task forces for detecting and countering AI-powered misinformation

  - Develop coordination mechanisms between federal, state, and local election officials

  - Create information sharing protocols with social media platforms and technology companies

  - Implement real-time monitoring systems for AI-generated content during election periods

- Develop International Cooperation Frameworks

  - Establish bilateral and multilateral agreements on AI governance in democratic contexts

  - Create information sharing mechanisms with allied democracies

  - Develop common standards for AI detection and verification technologies

  - Coordinate responses to foreign AI-powered interference campaigns

Medium-term Actions (2-5 years)

- Implement Comprehensive Regulatory Frameworks

  - Develop balanced regulations that protect democratic processes while preserving innovation

  - Create new legal categories for AI-generated content in political contexts

  - Establish regulatory agencies with expertise in AI and democratic governance

  - Implement regular review and update mechanisms for AI regulations

- Establish Democratic Accountability for AI Systems

  - Create oversight mechanisms for AI systems used in government operations

  - Develop audit requirements for AI systems affecting democratic processes

  - Establish citizen participation mechanisms in AI governance decisions

  - Create appeals processes for AI-related decisions affecting democratic rights

- Invest in Detection and Verification Technologies

  - Provide public funding for AI detection and verification research

  - Create public-private partnerships for developing democracy-serving AI technologies

  - Establish government procurement programs prioritizing transparent and accountable AI systems

  - Support open-source development of AI detection tools

- Develop New Institutional Mechanisms

  - Create new forms of democratic representation that account for AI-mediated communication

  - Establish citizen assemblies and deliberative democracy processes for AI governance

  - Develop new models of public participation in complex technological decisions

  - Create institutional mechanisms for maintaining democratic legitimacy in the AI era

Long-term Actions (5+ years)

- Restructure Democratic Institutions

  - Fundamentally redesign democratic institutions to operate effectively in AI-mediated environments

  - Create new models of representation that account for AI-enhanced political communication

  - Develop new theories and practices of democratic accountability in the age of AI

  - Establish new forms of civic engagement that leverage AI for democratic enhancement

- Establish Global Governance Frameworks

  - Create international institutions for governing AI technologies affecting democratic processes

  - Develop global standards for AI use in political contexts

  - Establish enforcement mechanisms for international AI governance agreements

  - Create dispute resolution mechanisms for AI-related international conflicts

For Technology Companies

Platform Governance

- Implement Content Authentication Systems

  - Develop robust content provenance tracking for all user-generated content

  - Create industry standards for content authentication and verification

  - Implement blockchain or other tamper-resistant technologies for content verification

  - Establish interoperability standards for authentication systems across platforms

- Enhance Content Moderation

  - Develop AI-specific content moderation policies and procedures

  - Create human-AI hybrid moderation systems for complex political content

  - Establish clear appeals processes for content moderation decisions

  - Implement transparency reporting for AI-related content moderation actions

- Create Transparency Mechanisms

  - Provide public access to information about AI systems used in political contexts

  - Create researcher access programs for studying AI impacts on democratic processes

  - Implement regular transparency reports on AI use and content moderation

  - Establish external audit mechanisms for AI systems affecting political discourse
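As a rough illustration of what the content authentication recommendation above could involve, the sketch below attaches a signed provenance record to a piece of content and later checks that neither the content nor the record has been altered. It is a minimal sketch under stated assumptions, not a description of any platform's system: the function names, record fields, and shared secret key are all hypothetical, and a real deployment would more likely use public-key signatures and an agreed industry standard rather than a shared-secret HMAC.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret, for illustration only.
SECRET_KEY = b"demo-key-not-for-production"


def make_manifest(content: bytes, publisher: str, ai_generated: bool) -> dict:
    """Build a provenance record for the content and sign it with HMAC-SHA256."""
    record = {
        "publisher": publisher,
        "ai_generated": ai_generated,
        "sha256": hashlib.sha256(content).hexdigest(),
    }
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["signature"] = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    return record


def verify(content: bytes, manifest: dict) -> bool:
    """Return True only if both the signature and the content hash match."""
    claimed = {k: v for k, v in manifest.items() if k != "signature"}
    payload = json.dumps(claimed, sort_keys=True).encode("utf-8")
    expected = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, manifest.get("signature", ""))
    hash_ok = claimed.get("sha256") == hashlib.sha256(content).hexdigest()
    return signature_ok and hash_ok


original = b"Candidate statement, 2024-10-01"
manifest = make_manifest(original, publisher="Example Campaign", ai_generated=True)

print(verify(original, manifest))                 # True: content is untouched
print(verify(original + b" (edited)", manifest))  # False: content was altered
```

The same check also fails if someone edits the record itself, for example by flipping the `ai_generated` flag, because the signature covers every field of the record.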

Technological Development

- Prioritize Democratic Values

  - Design AI systems with democratic principles as core requirements

  - Create development processes that consider democratic impacts from the outset

  - Establish ethical review boards for AI systems affecting political processes

  - Implement value-sensitive design methodologies for political AI applications

- Develop Detection Technologies

  - Invest in research and development of AI detection and verification tools

  - Create open-source detection tools available to researchers and civil society

  - Establish industry cooperation on detection technology development

  - Implement detection capabilities directly into content creation and distribution systems

- Enhance Democratic Discourse

  - Create AI tools that enhance rather than undermine democratic discourse

  - Develop systems that promote diverse viewpoints and reduce echo chambers

  - Implement AI systems that help users evaluate source credibility and information quality

  - Create tools that facilitate constructive political dialogue and deliberation

For Civil Society Organizations

Public Education

- Develop Media Literacy Programs

  - Create comprehensive curricula addressing AI-generated content identification

  - Develop age-appropriate educational materials for different demographic groups

  - Establish train-the-trainer programs for educators and community leaders

  - Create multilingual and culturally appropriate educational resources

- Build Public Awareness

  - Launch public awareness campaigns about AI capabilities and limitations

  - Create accessible explanations of AI technologies for general audiences

  - Develop fact-checking and verification services that can operate at scale

  - Establish public information resources about AI impacts on democracy

- Enhance Critical Thinking

  - Develop programs that enhance general critical thinking skills

  - Create tools and resources for evaluating information quality and source credibility

  - Establish community-based discussion forums for political dialogue

  - Develop civic engagement programs that account for AI-mediated political communication

Democratic Advocacy

- Monitor AI Use in Political Contexts

  - Establish watchdog organizations focused on AI impacts on democracy

  - Create monitoring systems for AI use in political campaigns and government operations

  - Develop reporting mechanisms for AI-related threats to democratic processes

  - Establish research programs studying AI impacts on democratic governance

- Advocate for Transparency and Accountability

  - Lobby for comprehensive AI transparency requirements in political contexts

  - Advocate for public participation in AI governance decisions

  - Push for accountability mechanisms for AI systems affecting democratic processes

  - Create coalitions of organizations working to protect democratic values in the AI era

- Develop New Models of Civic Engagement

  - Create innovative approaches to citizen participation that account for AI-mediated communication

  - Develop deliberative democracy processes that can function effectively in AI-saturated environments

  - Establish new forms of civic education that prepare citizens for AI-mediated political participation

  - Create community-based responses to AI challenges to democratic governance

For Citizens

Information Literacy

- Develop Critical Evaluation Skills

  - Learn to recognize common characteristics of AI-generated content

  - Develop habits of source verification and cross-referencing

  - Practice identifying emotional manipulation and logical fallacies in political content

  - Cultivate skepticism while maintaining openness to legitimate information

- Diversify Information Sources

  - Actively seek out diverse perspectives and information sources

  - Avoid reliance on single platforms or sources for political information

  - Engage with high-quality journalism and fact-checking organizations

  - Participate in face-to-face political discussions and community engagement

- Maintain Engagement Despite Complexity

  - Continue participating in democratic processes despite information challenges

  - Develop strategies for managing information overload and cognitive burden

  - Focus on core democratic values and principles when evaluating political choices

  - Seek out trusted sources and communities for political guidance and discussion

Democratic Participation

- Stay Informed About AI Developments

  - Follow developments in AI technology and its applications to political processes

  - Participate in public discussions about AI governance and regulation

  - Support organizations working to protect democratic values in the AI era

  - Engage with elected representatives about AI policy issues

- Participate in Democratic Processes

  - Vote in all elections with awareness of AI influences on political information

  - Engage in political discussions with friends, family, and community members

  - Participate in civic organizations and community groups

  - Support candidates and policies that prioritize democratic values and transparency

- Build Community Resilience

  - Strengthen local community networks and face-to-face relationships

  - Participate in community-based problem-solving and decision-making

  - Support local journalism and information sources

  - Create and maintain trusted networks for political information and discussion

Conclusion

The relationship between artificial intelligence and democratic governance stands at a critical juncture. The evidence from the 2024 election cycle and broader analysis of AI’s impact across multiple domains reveals a complex landscape where technological capabilities, institutional responses, and public adaptation interact in ways that could lead to dramatically different futures for democratic governance.

Key Insights

The Acceleration Effect

The most important finding of this analysis is that AI’s impact on democracy is characterized by acceleration rather than transformation. AI technologies amplify existing vulnerabilities in democratic systems—declining trust, increasing polarization, information fragmentation—while simultaneously creating new opportunities for democratic enhancement. This acceleration effect means that existing problems become more acute more quickly, but also that solutions can have amplified positive impacts.

Multi-Domain Interconnection

AI’s influence on democracy operates across four interconnected domains—campaign operations, voter behavior, information ecosystem, and institutional trust—with changes in one domain creating cascading effects across others. This interconnection means that effective responses to AI challenges must be comprehensive and coordinated rather than focused on single domains or issues.

The Trust Crisis Acceleration

Perhaps the most serious finding is that AI technologies have accelerated an erosion of trust in democratic institutions that was already underway. With only 22% of Americans trusting government to do the right thing and Congress receiving just 9% confidence ratings, AI-related challenges are arriving in a particularly vulnerable environment where even small additional stresses can have disproportionate impacts.

Generational and Partisan Fragmentation

The fragmentation of information sources along generational and partisan lines, accelerated by AI-powered personalization, represents a fundamental challenge to democratic discourse. When different groups of citizens operate with fundamentally different sets of facts about political reality, the shared epistemic foundation necessary for democratic decision-making is undermined.

Scenario Implications

The three scenarios developed in this analysis—Adaptive Resilience, Fragmented Polarization, and Institutional Collapse—represent plausible but not inevitable futures. The current trajectory appears to favor Fragmented Polarization (45% probability), but the path toward Adaptive Resilience (35% probability) remains achievable with appropriate effort and coordination.

The key insight is that the outcome is not predetermined by technological capabilities alone. The choices made by policymakers, technology companies, civil society organizations, and citizens themselves in the coming years will largely determine which scenario emerges.
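The scenario probabilities quoted above can be checked with simple arithmetic. The sketch below is illustrative only: it assumes the three scenarios are mutually exclusive and exhaustive, which this passage does not state outright, and derives the share left for Institutional Collapse on that basis. The variable names are hypothetical.

```python
# Scenario probabilities as quoted in the text, in whole percentage points.
stated = {
    "Fragmented Polarization": 45,
    "Adaptive Resilience": 35,
}

# ASSUMPTION: the three scenarios are mutually exclusive and exhaustive,
# so whatever is not assigned above falls to Institutional Collapse.
implied_collapse = 100 - sum(stated.values())

scenarios = {**stated, "Institutional Collapse": implied_collapse}

# Rank scenarios from most to least likely under that assumption.
ranked = sorted(scenarios.items(), key=lambda kv: kv[1], reverse=True)

for name, pct in ranked:
    print(f"{name}: {pct}%")
```

Under that assumption the residual is 20 percentage points, so the two adverse scenarios together outweigh Adaptive Resilience by roughly two to one. That is consistent with the text's claim that the favorable path is achievable but not the default.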

Critical Success Factors

Several factors will be critical for achieving the Adaptive Resilience scenario:

Coordinated Response: Effective adaptation requires coordination across multiple sectors and stakeholders. No single actor—whether government, technology companies, or civil society—can address AI challenges to democracy alone.

Rapid Implementation: The window for effective action is limited. Key interventions must be implemented within the next 2-3 years to steer the trajectory toward positive outcomes.

Public Engagement: Success requires active public participation in AI governance decisions. Citizens must be informed, engaged, and empowered to shape how AI technologies are developed and deployed in democratic contexts.

International Cooperation: AI challenges to democracy are global in nature and require coordinated international responses. Democratic countries must work together to develop common standards and responses.

Technological Innovation: Solutions require not just policy responses but also technological innovations that serve democratic values. Investment in AI detection, verification, and transparency technologies is essential.

The Path Forward

The path toward preserving and strengthening democracy in the age of AI requires recognizing that this is fundamentally a social and political challenge rather than merely a technological one. Technological solutions such as detection tools, verification systems, and platform governance mechanisms are important, but they are insufficient without broader efforts to rebuild epistemic trust, strengthen democratic institutions, and maintain public engagement with democratic processes.

The stakes could not be higher. Democracy as a system of governance has proven remarkably resilient throughout its history, adapting to technological changes from the printing press to television to the internet. However, the speed and scope of AI-driven changes present unprecedented challenges that require unprecedented responses.

Final Recommendations

- Treat AI governance as a democratic imperative, not merely a technological challenge

- Invest massively in public education and media literacy to prepare citizens for AI-mediated political environments

- Prioritize rebuilding epistemic trust through transparency, accountability, and institutional reform

- Develop new models of democratic participation that account for AI-mediated political communication

- Create international cooperation frameworks for addressing global AI challenges to democracy

- Maintain focus on core democratic values while adapting institutions and processes to new technological realities

The future of democracy in the age of AI is not predetermined. It will be shaped by the choices we make, the institutions we build, and the values we choose to prioritize. The analysis presented here provides a framework for understanding the challenges and opportunities ahead, but the ultimate outcome depends on our collective commitment to preserving and strengthening democratic governance in an age of unprecedented technological change.

The window for effective action is open, but it will not remain so indefinitely. The next five years will likely determine whether AI becomes a tool for democratic enhancement or a catalyst for democratic decay. The responsibility for ensuring a positive outcome lies with all of us—as citizens, as members of democratic societies, and as participants in the ongoing experiment of democratic governance.

References

[1] Pew Research Center. “Public Trust in Government: 1958-2024.” June 24, 2024. https://www.pewresearch.org/politics/2024/06/24/public-trust-in-government-1958-2024/

[2] Gallup. “Confidence in Institutions.” Historical Trends. https://news.gallup.com/poll/1597/confidence-institutions.aspx

[3] Knight First Amendment Institute. “We Looked at 78 Election Deepfakes. Political Misinformation Is Not an AI Problem.” https://knightcolumbia.org/blog/we-looked-at-78-election-deepfakes-political-misinformation-is-not-an-ai-problem

[4] Harvard Kennedy School Misinformation Review. “The origin of public concerns over AI supercharging misinformation in the 2024 U.S. presidential election.” January 30, 2025. https://misinforeview.hks.harvard.edu/article/the-origin-of-public-concerns-over-ai-supercharging-misinformation-in-the-2024-u-s-presidential-election/

[5] George Washington University Graduate School of Political Management. “Post-Election Poll Study Reveals Deepening Distrust in Government and Information Sources.” December 11, 2024. https://gspm.gwu.edu/post-election-poll-study-reveals-deepening-distrust-government-and-information-sources

[6] Brennan Center for Justice. “How to Detect and Guard Against Deceptive AI-Generated Election Information.” https://www.brennancenter.org/our-work/research-reports/how-detect-and-guard-against-deceptive-ai-generated-election-information

[7] Oxford Internet Institute. “The effectiveness of large language models in political microtargeting assessed in new study.” June 26, 2024. https://www.ox.ac.uk/news/2024-06-26-effectiveness-large-language-models-political-microtargeting-assessed-new-study

[8] Harvard Ash Center. “The apocalypse that wasn’t: AI was everywhere in 2024’s elections, but deepfakes and misinformation were only part of the picture.” December 4, 2024. https://ash.harvard.edu/articles/the-apocalypse-that-wasnt-ai-was-everywhere-in-2024s-elections-but-deepfakes-and-misinformation-were-only-part-of-the-picture/

[9] Stanford Graduate School of Business. “Wreck the Vote: How AI-Driven Misinformation Could Undermine Democracy.” October 16, 2024. https://www.gsb.stanford.edu/insights/wreck-vote-how-ai-driven-misinformation-could-undermine-democracy

[10] Associated Press. “Most Americans don’t trust AI-powered election information: AP-NORC-USAFacts survey.” https://www.ap.org/news-highlights/spotlights/2024/most-americans-dont-trust-ai-powered-election-information-ap-norc-usafacts-survey/

[11] National Conference of State Legislatures. “Artificial Intelligence (AI) in Elections and Campaigns.” https://www.ncsl.org/elections-and-campaigns/artificial-intelligence-ai-in-elections-and-campaigns

[12] NPR. “Deepfakes, memes and artificial intelligence played a big role in the 2024 election.” December 21, 2024. https://www.npr.org/2024/12/21/nx-s1-5220301/deepfakes-memes-artificial-intelligence-elections

[13] Digiday. “How AI shaped the 2024 election, from ad strategy to voter sentiment analysis.” https://digiday.com/media-buying/how-ai-shaped-the-2024-election-from-ad-strategy-to-voter-sentiment-analysis/

[14] Brennan Center for Justice. “Generative AI and Political Advertising.” https://www.brennancenter.org/our-work/research-reports/generative-ai-political-advertising

Appendices

Appendix A: Methodology Details

Data Collection Methods

- Systematic review of academic literature on AI and democracy

- Analysis of polling data from major organizations (Pew, Gallup, AP-NORC)

- Case study analysis of 2024 election AI implementations

- Content analysis of AI-generated political content

- Expert interviews with researchers, practitioners, and policymakers

Analytical Framework

- Multi-domain impact model development

- Scenario planning methodology

- Probability assessment techniques

- Cross-validation of findings across multiple sources

Limitations

- Rapidly evolving technological landscape

- Limited data on long-term impacts

- Difficulty isolating AI effects from other factors

- Potential bias in available research and reporting

Appendix B: Technical Specifications

AI Detection Technologies

- Current capabilities and limitations of detection systems

- Accuracy rates for different types of AI-generated content

- Comparison of commercial and open-source detection tools

- Technical challenges in detection system development

Content Authentication Systems

- Blockchain-based provenance tracking

- Digital watermarking technologies

- Cryptographic verification methods

- Interoperability standards and challenges

Platform Governance Mechanisms

- Content moderation policy frameworks

- Automated detection and response systems

- Human-AI hybrid moderation approaches

- Appeals and oversight processes

Appendix C: International Comparisons

European Union Approaches

- AI Act implications for political content

- Digital Services Act enforcement mechanisms

- Member state variations in AI governance

- Cross-border cooperation frameworks

Other Democratic Countries

- Canada’s approach to AI in elections

- Australia’s regulatory framework development

- United Kingdom’s AI governance strategy

- Comparative analysis of democratic responses

Authoritarian Uses of AI

- China’s AI-powered information control

- Russia’s AI-enabled disinformation campaigns

- Iran’s AI applications in domestic and international contexts

- Lessons for democratic defense strategies

Appendix D: Stakeholder Analysis

Government Stakeholders

- Federal agencies and their roles

- State and local election officials

- Legislative bodies and oversight committees

- International cooperation mechanisms

Technology Industry

- Major platform companies and their policies

- AI development companies and their responsibilities

- Startup ecosystem and innovation dynamics

- Industry association roles and standards

Civil Society Organizations

- Democracy and governance organizations

- Technology policy advocacy groups

- Media literacy and education organizations

- Academic research institutions

International Organizations

- United Nations and AI governance initiatives

- OECD democracy and technology programs

- Regional organizations and cooperation frameworks

- Multilateral treaty and agreement possibilities