The release of GPT-5 marks a new phase in the AI model market—not just in terms of capability, but also in competitive pricing. OpenAI’s new flagship is pitched squarely against Google’s Gemini 2.5 Pro, Anthropic’s Claude Opus 4.1, and other top-tier large language models (LLMs). The battle for enterprise AI adoption is no longer just about performance; it’s a race to deliver the best price-to-capability ratio in an environment where API usage can quickly scale into thousands—or millions—of dollars per month.

In this article, we’ll compare GPT-5’s API costs against its OpenAI siblings and the leading competition from Google, Anthropic, Cohere, Mistral, and Meta, and examine what the numbers mean for businesses and developers.

1. The Core Pricing Structure

API pricing is typically billed per million tokens, split into input tokens (prompts) and output tokens (generated content). While input is often cheaper, output costs can balloon depending on your model choice and workload.
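To make the billing model concrete, here is a minimal sketch of the per-request arithmetic. The function name `request_cost` and the request size are illustrative assumptions; the rates used are the $1.25 input / $10.00 output per-million-token prices discussed in this article.

```python
def request_cost(input_tokens, output_tokens, input_price, output_price):
    """Return (input_cost, output_cost) in USD for a single request.

    Prices are expressed in USD per million tokens.
    """
    input_cost = input_tokens * input_price / 1_000_000
    output_cost = output_tokens * output_price / 1_000_000
    return input_cost, output_cost


# Illustrative request (assumed sizes): 1,000 prompt tokens, 500 generated tokens,
# billed at $1.25 input / $10.00 output per million tokens.
in_cost, out_cost = request_cost(1_000, 500, 1.25, 10.00)
print(f"input ${in_cost:.5f} + output ${out_cost:.5f} = ${in_cost + out_cost:.5f}")
```

In this example the output side makes up a third of the tokens but about 80% of the cost, which is why output-heavy workloads deserve the closest attention.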

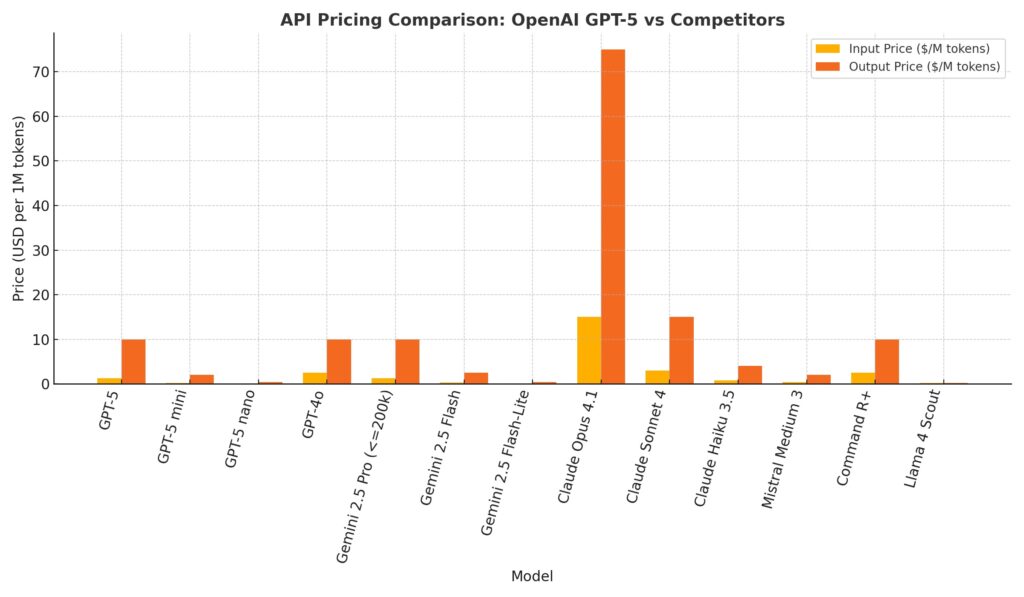

Here’s the current pricing, in USD per million tokens, for flagship and mid-tier models:

| Vendor | Model | Input ($/1M tokens) | Output ($/1M tokens) | Context Window |
|---|---|---|---|---|
| OpenAI | GPT-5 | 1.25 | 10.00 | 400k |
| OpenAI | GPT-5 mini | 0.25 | 2.00 | 200k |
| OpenAI | GPT-5 nano | 0.05 | 0.40 | 128k |
| OpenAI | GPT-4o | ~2.50 | ~10.00 | 128k |
| Google | Gemini 2.5 Pro | 1.25 | 10.00 | ≤200k priced same as GPT-5; >200k higher |
| Google | Gemini 2.5 Flash | 0.30 | 2.50 | 128k |
| Google | Gemini 2.5 Flash-Lite | 0.10 | 0.40 | 64k |
| Anthropic | Claude Opus 4.1 | 15.00 | 75.00 | 200k |
| Anthropic | Claude Sonnet 4 | 3.00 | 15.00 | 200k |
| Mistral | Mistral Medium 3 | 0.40 | 2.00 | 128k |
| Cohere | Command R+ | 2.50 | 10.00 | 128k |
| Meta (via providers) | Llama 4 Scout | ~0.18 blended | ~0.18 blended | varies |

2. GPT-5’s Price Positioning

GPT-5’s $1.25 input / $10 output price matches Gemini 2.5 Pro in the ≤200k context tier. It is far cheaper than Anthropic’s Claude Opus 4.1, which costs 12× as much for input ($15 vs. $1.25) and 7.5× as much for output ($75 vs. $10).

Where GPT-5 becomes especially attractive is at large context windows—Google increases Pro pricing for >200k contexts, while OpenAI keeps GPT-5 flat at $1.25 / $10.

3. The Budget Tiers: Mini and Nano

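For many workloads, you don’t need the absolute top-tier reasoning of GPT-5. That’s where GPT-5 mini ($0.25 / $2) and GPT-5 nano ($0.05 / $0.40) shine. Mini undercuts Gemini 2.5 Flash ($0.30 / $2.50) on both input and output, and nano halves Flash-Lite’s $0.10 input price while matching its $0.40 output price. Both also beat Mistral Medium 3’s already aggressive $0.40 input price; mini matches its $2 output price, and nano undercuts it.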

If your use case is:

- Short-form customer support

- Simple classification tasks

- Low-complexity content generation

…then mini or nano can cut costs by 80% (mini) to 96% (nano) relative to GPT-5’s flagship pricing.
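The exact savings follow directly from the listed prices. The sketch below computes them; the `PRICES` dictionary and the `savings_vs` helper are illustrative names, not part of any vendor SDK, and the figures are the per-million-token prices from the pricing table.

```python
# Per-million-token prices in USD as (input, output), taken from the pricing table.
PRICES = {
    "GPT-5": (1.25, 10.00),
    "GPT-5 mini": (0.25, 2.00),
    "GPT-5 nano": (0.05, 0.40),
}


def savings_vs(baseline, model):
    """Percent saved on (input, output) prices by switching from baseline to model."""
    base_in, base_out = PRICES[baseline]
    model_in, model_out = PRICES[model]
    return 100 * (1 - model_in / base_in), 100 * (1 - model_out / base_out)


for name in ("GPT-5 mini", "GPT-5 nano"):
    save_in, save_out = savings_vs("GPT-5", name)
    print(f"{name}: {save_in:.0f}% off input, {save_out:.0f}% off output")
```

Because each tier is discounted by the same factor on input and output, the saving does not depend on your input/output mix, only on whether the smaller model can handle the task.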

4. Monthly Cost Scenarios

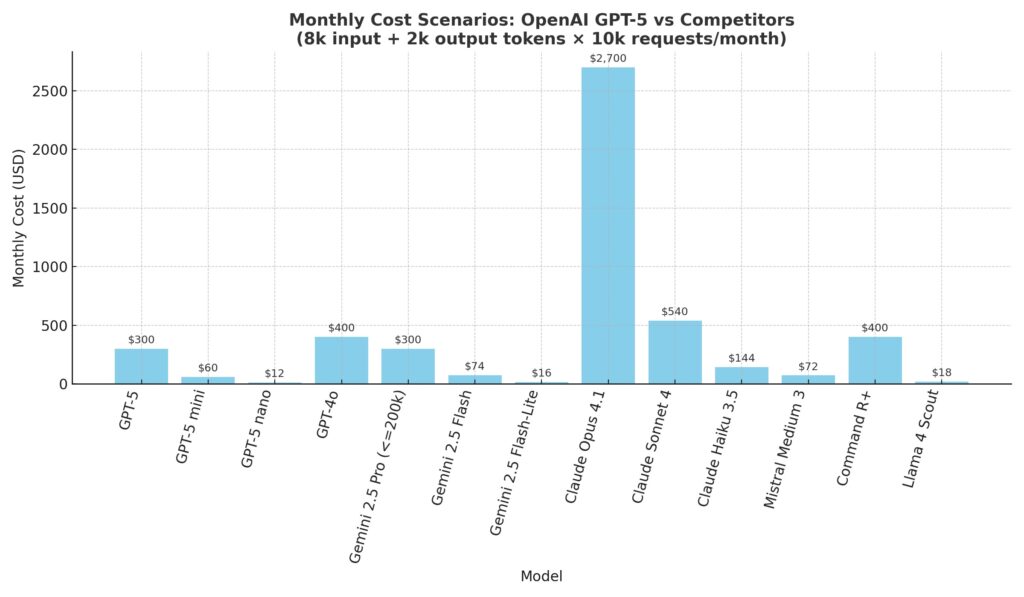

Let’s take a realistic example:

- 8,000 input tokens per request

- 2,000 output tokens per request

- 10,000 requests per month

At that volume (80 million input tokens and 20 million output tokens per month), here’s what you’d pay:

| Model | Monthly Cost (USD) |
|---|---|
| GPT-5 mini | $60 |
| GPT-5 | $300 |
| Gemini 2.5 Pro (≤200k) | $300 |
| Claude Opus 4.1 | $2,700 |

The gap between Claude Opus 4.1 and GPT-5 is stark: Opus costs 9× as much per month for the same workload ($2,700 vs. $300).
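The monthly figures can be reproduced with a few lines of Python. This is a sketch under the scenario’s assumptions (every request uses exactly 8,000 input and 2,000 output tokens); `monthly_cost` is an illustrative helper, and the prices are those from the pricing table.

```python
# Per-million-token prices in USD as (input, output), taken from the pricing table.
PRICES = {
    "GPT-5 mini": (0.25, 2.00),
    "GPT-5": (1.25, 10.00),
    "Gemini 2.5 Pro (<=200k)": (1.25, 10.00),
    "Claude Opus 4.1": (15.00, 75.00),
}


def monthly_cost(model, input_tokens, output_tokens, requests):
    """Monthly USD cost when every request has the same token counts."""
    in_price, out_price = PRICES[model]
    total_in = input_tokens * requests    # total input tokens per month
    total_out = output_tokens * requests  # total output tokens per month
    return (total_in * in_price + total_out * out_price) / 1_000_000


for model in PRICES:
    print(f"{model}: ${monthly_cost(model, 8_000, 2_000, 10_000):,.0f}")
```

Swapping in your own token counts and request volume is usually the quickest way to see whether the flagship tier is affordable for a given workload.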

5. Beyond the Sticker Price

When comparing API costs, you need to consider:

- Token efficiency – Some models require fewer tokens to produce equivalent output, effectively lowering your cost per task.

- Hidden “thinking” tokens – Gemini 2.5 and potentially GPT-5 count reasoning steps as output tokens, inflating bills for complex tasks.

- Prompt caching – Anthropic’s Claude models offer caching discounts, which can be valuable for repetitive prompts.

- Throughput & latency – A cheaper model that responds faster can lower operational costs when scaled to thousands of concurrent requests.
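Two of these factors, thinking tokens and prompt caching, are easy to fold into a cost estimate. The sketch below is a simplified model, not any vendor’s billing formula: the `effective_cost` helper, the 3,000 reasoning tokens, the 75% cached share, and the 90% cache discount are all hypothetical values chosen for illustration, and it ignores any surcharge a vendor may apply when a cache entry is first written.

```python
def effective_cost(input_tokens, visible_output_tokens, reasoning_tokens,
                   input_price, output_price,
                   cached_fraction=0.0, cache_discount=0.0):
    """USD cost of one request under a simplified billing model.

    reasoning_tokens are billed at the output rate. cached_fraction of the
    input is billed at (1 - cache_discount) times the input rate.
    Prices are in USD per million tokens.
    """
    cached = input_tokens * cached_fraction
    uncached = input_tokens - cached
    input_cost = (uncached + cached * (1 - cache_discount)) * input_price
    output_cost = (visible_output_tokens + reasoning_tokens) * output_price
    return (input_cost + output_cost) / 1_000_000


# Flagship rates of $1.25 / $10: hidden reasoning tokens (hypothetical count)
# raise the bill even though the visible answer is unchanged.
plain = effective_cost(8_000, 2_000, 0, 1.25, 10.00)
thinking = effective_cost(8_000, 2_000, 3_000, 1.25, 10.00)
print(f"no reasoning: ${plain:.3f}, with reasoning: ${thinking:.3f}")

# Premium rates of $15 / $75: caching most of a repeated prompt
# (hypothetical 75% cached share at a 90% discount) lowers the input side.
uncached = effective_cost(8_000, 2_000, 0, 15.00, 75.00)
cached = effective_cost(8_000, 2_000, 0, 15.00, 75.00,
                        cached_fraction=0.75, cache_discount=0.90)
print(f"no cache: ${uncached:.3f}, with cache: ${cached:.3f}")
```

Under these assumed numbers, reasoning tokens double the per-request cost at flagship rates, while caching trims the premium-tier request by roughly 30%. Real discounts and reasoning overhead vary by vendor and task, so treat the parameters as inputs to measure, not constants.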

6. Which Model Should You Choose?

Choose GPT-5 if:

- You need flagship quality at a competitive price

- Your context size stays under 400k tokens

- You want parity pricing with Gemini 2.5 Pro but prefer OpenAI’s ecosystem

Choose GPT-5 mini or nano if:

- You prioritize cost-efficiency over cutting-edge reasoning

- Your use cases are high-volume but low-complexity

Choose Gemini 2.5 Pro if:

- You are already in Google’s cloud ecosystem

- You want integrated multimodal support with large context at Pro-tier quality

Choose Claude Opus 4.1 if:

- You require exceptional reasoning and are willing to pay a premium

- You can take advantage of prompt caching to mitigate costs

Conclusion

In 2025, the LLM market is more price-competitive than ever. GPT-5’s pricing strategy is clearly aimed at taking market share from Google’s Gemini Pro tier, while its mini and nano variants aggressively undercut mid-tier and budget competitors.

For most businesses, GPT-5 offers a sweet spot of cost and capability. But the real savings come when you precisely match the model tier to the complexity of your workload—and keep a close eye on how output tokens (especially “thinking” ones) impact your bottom line.
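To see what tier matching can be worth, here is a rough sketch that blends the monthly costs from section 4 across two tiers. The 70/30 split is a hypothetical routing ratio, `blended_monthly_cost` is an illustrative helper, and the model assumes requests sent to either tier have the same token counts.

```python
def blended_monthly_cost(monthly_costs, shares):
    """Monthly USD cost when each model handles a given share of the traffic.

    monthly_costs maps model name to the cost of running 100% of the workload
    on that model; shares maps model name to its fraction of requests.
    """
    if abs(sum(shares.values()) - 1.0) > 1e-9:
        raise ValueError("shares must sum to 1")
    return sum(monthly_costs[model] * share for model, share in shares.items())


# Full-workload monthly costs from the section 4 scenario.
MONTHLY = {"GPT-5": 300.0, "GPT-5 mini": 60.0}

# Hypothetical split: 70% of requests are simple enough for mini.
blended = blended_monthly_cost(MONTHLY, {"GPT-5 mini": 0.7, "GPT-5": 0.3})
print(f"blended: ${blended:.0f} vs. all-flagship: ${MONTHLY['GPT-5']:.0f}")
```

With that assumed split, the bill drops from $300 to $132 per month, which is why it pays to measure how much of your traffic truly needs the flagship tier.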