OpenAI’s GPT-5 has officially launched as the company’s most advanced, most capable, and safest AI model to date. CEO Sam Altman says it is still not AGI (artificial general intelligence). Even so, many see it as a major step toward that goal, the intelligence equivalent of going from a standard display to a “retina” one.

Universal Access — No More Model Switching

One of the most noticeable changes is model routing. Users no longer choose among GPT-4, GPT-4o, or reasoning models such as o1. They simply talk to GPT-5, and the system automatically routes each request to the right variant:

- Lightweight version for quick or simple queries

- High-depth “thinking” mode for complex reasoning or multi-step problem solving

- Specialized variants for Pro subscribers

This approach removes friction for end-users while still delivering the right balance of speed, quality, and cost.
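To make the routing idea concrete, here is a toy router in Python. OpenAI has not published how its real router decides, so everything below is an assumption. The keyword list, the 200-word threshold, the `Request` type, the `route_request` function, and the variant labels are all hypothetical and exist only to illustrate the concept.

```python
from dataclasses import dataclass

# Hypothetical signals; the real router's criteria are not public.
REASONING_HINTS = ("step by step", "prove", "debug", "analyze", "plan")
LONG_PROMPT_WORDS = 200


@dataclass
class Request:
    prompt: str
    is_pro: bool = False
    wants_max_depth: bool = False  # user explicitly asked for the deepest mode


def route_request(req: Request) -> str:
    """Return a hypothetical variant label for a request."""
    text = req.prompt.lower()
    needs_reasoning = (
        len(text.split()) > LONG_PROMPT_WORDS
        or any(hint in text for hint in REASONING_HINTS)
    )
    if req.wants_max_depth and req.is_pro:
        return "gpt-5-pro"       # specialized variant reserved for Pro subscribers
    if needs_reasoning:
        return "gpt-5-thinking"  # high-depth mode for multi-step problems
    return "gpt-5-main"          # lightweight path for quick queries


print(route_request(Request("What's the capital of France?")))
print(route_request(Request("Prove that sqrt(2) is irrational, step by step.")))
print(route_request(Request("Review this architecture", is_pro=True, wants_max_depth=True)))
```

A production router would learn from real usage rather than rely on fixed keywords, but the shape is the same: inspect the request, then pick the cheapest variant that can handle it well.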

Pricing & Tiers

The release introduces three core model tiers for developers and enterprises:

| Model | Input Price (per 1M tokens) | Output Price (per 1M tokens) | Context Window (API) |
|---|---|---|---|
| GPT-5 | $1.25 | $10.00 | 400k tokens |
| GPT-5 Mini | $0.25 | $2.00 | 400k tokens |
| GPT-5 Nano | $0.05 | $0.40 | 400k tokens |
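Because pricing is per million tokens, estimating the cost of a single request is simple arithmetic. The sketch below uses the prices from the table. It also models prompt caching. The assumption here is that cached input tokens are billed at 10% of the normal input price, which matches OpenAI's launch pricing as we understand it, but check the current pricing page. The `request_cost` helper is hypothetical.

```python
# Per-1M-token prices (USD) from the pricing table.
PRICES = {
    "gpt-5": {"input": 1.25, "output": 10.00},
    "gpt-5-mini": {"input": 0.25, "output": 2.00},
    "gpt-5-nano": {"input": 0.05, "output": 0.40},
}

# Assumption: cached input tokens cost 10% of the regular input price.
CACHED_INPUT_FRACTION = 0.10


def request_cost(model: str, input_tokens: int, output_tokens: int,
                 cached_tokens: int = 0) -> float:
    """Estimate the USD cost of one request.

    cached_tokens is the portion of input_tokens served from the prompt cache.
    """
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    price = PRICES[model]
    uncached = input_tokens - cached_tokens
    total = (
        uncached * price["input"]
        + cached_tokens * price["input"] * CACHED_INPUT_FRACTION
        + output_tokens * price["output"]
    )
    return total / 1_000_000


# A request with 10k input tokens and 1k output tokens on each tier.
for model in PRICES:
    print(f"{model}: ${request_cost(model, 10_000, 1_000):.6f}")

# Same GPT-5 request when 8k of the input tokens hit the prompt cache.
print(f"gpt-5 (8k cached): ${request_cost('gpt-5', 10_000, 1_000, cached_tokens=8_000):.6f}")
```

With these numbers, the 10k-in/1k-out request costs about 2.25 cents on GPT-5 and about 0.09 cents on Nano. Caching 8k of the input tokens brings the GPT-5 figure down to about 1.35 cents.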

Key implications:

- Free ChatGPT users get GPT-5, falling back to GPT-5 Mini once they reach their usage limit.

- Pro plan (~$200/month): Unlocks unlimited GPT-5, GPT-5 Pro, and a “thinking” version with longer reasoning chains and fewer hallucinations.

- API developers can mix and match tiers to balance performance and budget.
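Mixing and matching tiers is easiest to reason about as a blended cost. The sketch below compares sending all traffic to GPT-5 with a hypothetical split that sends most requests to the cheaper tiers. The traffic shares, request volume, and average token counts are invented for illustration. The prices come from the table, and `blended_cost` is a hypothetical helper.

```python
# Per-1M-token (input, output) prices in USD from the pricing table.
PRICES = {
    "gpt-5": (1.25, 10.00),
    "gpt-5-mini": (0.25, 2.00),
    "gpt-5-nano": (0.05, 0.40),
}


def blended_cost(traffic_share: dict[str, float], requests: int,
                 avg_in: int, avg_out: int) -> float:
    """Estimated USD cost of `requests` calls split across tiers by traffic_share.

    Simplification: every request uses the same average input/output token counts.
    """
    if abs(sum(traffic_share.values()) - 1.0) > 1e-9:
        raise ValueError("traffic shares must sum to 1")
    per_request = {
        model: (avg_in * p_in + avg_out * p_out) / 1_000_000
        for model, (p_in, p_out) in PRICES.items()
    }
    return requests * sum(share * per_request[m] for m, share in traffic_share.items())


all_gpt5 = blended_cost({"gpt-5": 1.0}, 100_000, 2_000, 500)
mixed = blended_cost({"gpt-5-nano": 0.7, "gpt-5-mini": 0.2, "gpt-5": 0.1},
                     100_000, 2_000, 500)
print(f"all GPT-5: ${all_gpt5:,.2f}   mixed: ${mixed:,.2f}")
```

In this made-up scenario, routing 70% of traffic to Nano and 20% to Mini cuts the estimate from $750 to $126. Whether the cheaper tiers are good enough for that share of requests is something you would need to measure on your own workload.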

Major Capability Upgrades

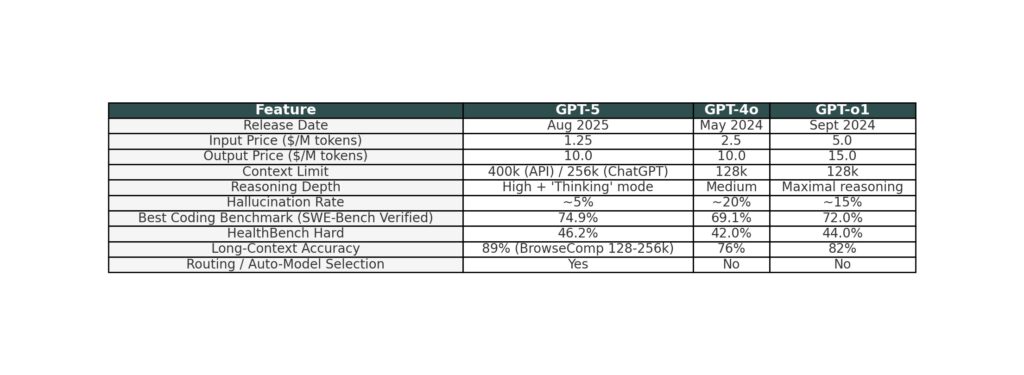

Coding

- Outperforms previous models on coding benchmarks, scoring 74.9% on SWE-bench Verified and 88% on Aider Polyglot.

- Generates complete front-end UIs from a single prompt, with a better eye for design and more accessible code.

- Better at debugging across large repos, following style guides, and suggesting refactors.

Reasoning & Problem Solving

- “Thinking” mode improves multi-step logical reasoning.

- Hallucination rate reduced from ~20% to ~5% in controlled benchmarks.

- Handles long-context problems with up to 256,000 tokens in ChatGPT or 400,000 tokens in the API.
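Before sending a long document, it helps to check that the document, your instructions, and the expected answer all fit within the context limit. The sketch below uses the 400,000-token API figure quoted above as its default. It relies on the rough rule of thumb of about 4 characters per English token. The prompt overhead and output reservation are hypothetical defaults. For exact counts, use a real tokenizer such as OpenAI's `tiktoken` library instead of `estimate_tokens`.

```python
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate (~4 characters per token for English text)."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(document: str, context_limit: int = 400_000,
                    reserved_for_output: int = 32_000,
                    prompt_overhead: int = 1_000) -> bool:
    """Check whether the document plus instructions leaves room for the response."""
    needed = estimate_tokens(document) + prompt_overhead + reserved_for_output
    return needed <= context_limit


contract = "x" * 1_200_000  # stand-in for a ~300k-token document
print(fits_in_context(contract))                         # True: 333k <= 400k
print(fits_in_context(contract, context_limit=256_000))  # False: 333k > 256k
```

Reserving output space matters because the limit covers the whole exchange. A document that "fits" with nothing left over would leave the model no room to answer.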

Health Applications

- More proactive: asks follow-up questions, flags inconsistencies.

- Improved performance on HealthBench Hard (46.2%), a significant jump from prior models.

- Lower risk of giving unsafe or misleading medical advice.

New Personalization & Integration Features

In ChatGPT:

- Personas: choose a preset personality (“Cynic,” “Robot,” “Listener,” or “Nerd”)

- UI customization: change the interface’s color theme

- Google integrations: connect Gmail and Google Calendar for context-aware responses (e.g., summarizing meetings, drafting follow-up emails)

- Memory improvements: GPT-5 can retain more long-term context across sessions

Safety & Trust

Safety is a major focus:

- Sycophancy reduction: less likely to agree blindly with incorrect statements

- Ambiguity handling: asks clarifying questions instead of guessing

- Safe completions: for risky or dual-use prompts, gives the most helpful answer it can within safety limits instead of refusing outright

- Audit logs: enterprise deployments get improved logging for compliance

Developer-Focused Improvements

- Custom tools with free-form inputs: the model can call developer-defined tools with plain-text arguments instead of strict JSON

- Verbosity parameter: request concise or elaborate output

- Reasoning effort parameter: trade speed for accuracy, now including a “minimal” setting for the fastest responses

- Prompt caching (API): reduce the cost of tokens that repeat across calls, such as recurring system prompts

- Streaming outputs: real-time partial responses for faster perceived performance
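Here is how several of these controls might come together in one request. This is a sketch that assembles a Responses API request body as a plain dictionary, so it runs without an API key. The field shapes (`reasoning.effort`, `text.verbosity`, a tool with `"type": "custom"`, and `stream`) follow OpenAI's GPT-5 developer documentation as we understand it at launch. Verify them against the current API reference before relying on them. The `build_gpt5_request` helper and the `run_sql` tool are hypothetical.

```python
import json

VALID_EFFORT = {"minimal", "low", "medium", "high"}
VALID_VERBOSITY = {"low", "medium", "high"}


def build_gpt5_request(prompt: str, effort: str = "medium",
                       verbosity: str = "medium", stream: bool = False) -> dict:
    """Assemble a Responses API request body (hypothetical helper)."""
    if effort not in VALID_EFFORT:
        raise ValueError(f"unknown reasoning effort: {effort}")
    if verbosity not in VALID_VERBOSITY:
        raise ValueError(f"unknown verbosity: {verbosity}")
    return {
        "model": "gpt-5",
        "input": prompt,
        "reasoning": {"effort": effort},   # speed vs. accuracy trade-off
        "text": {"verbosity": verbosity},  # concise vs. elaborate output
        "tools": [{
            "type": "custom",              # model sends free-form text, not JSON
            "name": "run_sql",             # hypothetical tool
            "description": "Runs a read-only SQL query and returns the rows.",
        }],
        "stream": stream,                  # deliver partial output as it is generated
    }


payload = build_gpt5_request("Summarize last week's signups.",
                             effort="minimal", verbosity="low", stream=True)
print(json.dumps(payload, indent=2))

# With the official `openai` package and an API key, the body could be sent as:
#   from openai import OpenAI
#   OpenAI().responses.create(**payload)
```

Prompt caching needs no extra field in this sketch. It applies to repeated prompt prefixes, so keeping static instructions at the start of `input` gives the cache the best chance to hit.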

Why This Matters for Businesses

The price-performance ratio means companies can:

- Replace multiple specialized models with GPT-5 tiers

- Deploy customer support bots that are faster and less error-prone

- Power agent workflows that chain tools and handle complex logic without breaking mid-task

- Integrate long-document analysis into pipelines without manual chunking

Bottom Line

GPT-5 is not AGI, but it is more trustworthy, more capable, and more adaptable than anything OpenAI has shipped before. For everyday users, it feels more human-like and dependable. For developers and enterprises, it is a platform upgrade, with better reasoning, massive context support, strong safety features, and flexible cost tiers.