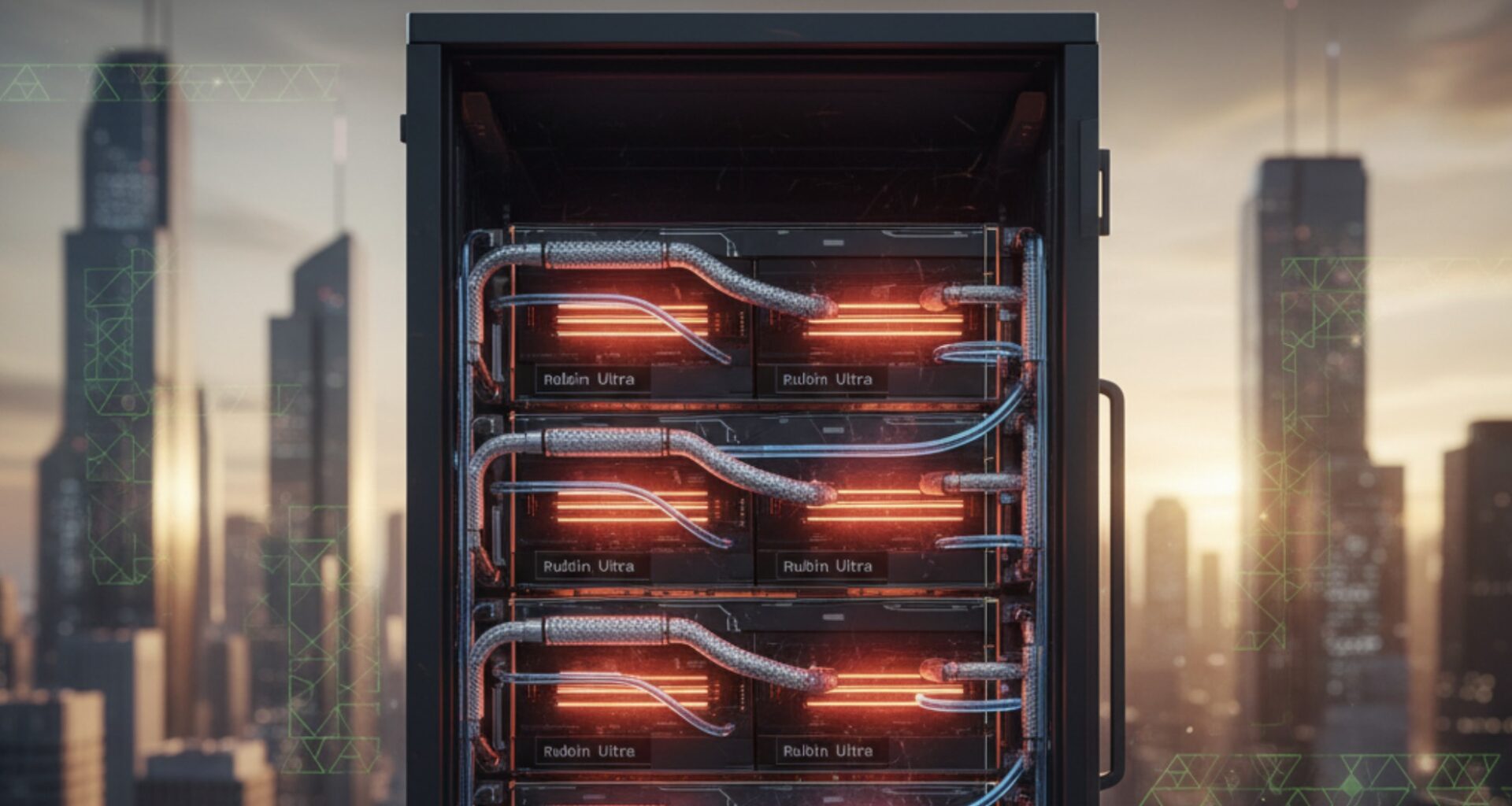

In 2027, NVIDIA’s “Rubin Ultra” platform may mark not just a generational leap in performance but a fundamental break with the physical assumptions that have guided computing for decades. Early indications from industry roadmaps suggest each Rubin Ultra GPU could draw as much as 2.3 kilowatts, with full Kyber-rack configurations approaching 600 kilowatts. These are no longer computers in the classical sense—they are AI furnaces, reshaping the economics of heat, power, and data.

1. The Physics Wall Arrives

For years, GPU architects have danced around the limits of thermodynamics. Every node shrink brought higher transistor counts but also escalating power density. With Hopper rated at up to roughly 700 W and Blackwell at 1 kW or more per GPU, Rubin Ultra crosses a symbolic and practical threshold: cooling becomes as critical as computation. Airflow alone no longer suffices, and conventional cold plates are nearing their limits. Instead, micro-channel liquid cooling, which essentially plumbs precision heat exchangers directly into the silicon, is emerging as the likely default design philosophy.

The implications ripple outward. Data centers are evolving from air-cooled rooms to liquid-loop ecosystems, integrating high-flow manifolds, dielectric fluids, and chiller-to-chip loops. The line between data infrastructure and chemical plant continues to blur.
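To give a sense of scale for those liquid loops, the steady-state heat balance Q = ṁ · c_p · ΔT can be turned into a rough flow estimate. The sketch below assumes plain water as the coolant and treats the whole 600 kW rack figure as heat to be removed; the temperature rises tried are illustrative assumptions, not vendor specifications, and `coolant_flow_l_per_min` is a hypothetical helper name.

```python
# Rough coolant-flow estimate from the heat balance Q = m_dot * c_p * delta_T.
# Assumptions: water coolant, all rack power ends up as heat in the loop.

WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0  # approximate value for liquid water
WATER_DENSITY_KG_PER_L = 1.0             # approximate, near room temperature


def coolant_flow_l_per_min(heat_load_w: float, delta_t_k: float) -> float:
    """Water flow (litres/minute) needed to absorb heat_load_w watts
    while the coolant warms by delta_t_k kelvin."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_per_s = heat_load_w / (WATER_SPECIFIC_HEAT_J_PER_KG_K * delta_t_k)
    return mass_flow_kg_per_s / WATER_DENSITY_KG_PER_L * 60.0


if __name__ == "__main__":
    rack_heat_w = 600_000.0  # rack figure quoted in the article
    for delta_t in (5.0, 10.0, 15.0):  # illustrative coolant temperature rises
        flow = coolant_flow_l_per_min(rack_heat_w, delta_t)
        print(f"delta_T = {delta_t:4.1f} K -> {flow:,.0f} L/min")
```

Under these assumptions, a 10 K temperature rise works out to roughly 860 litres of water per minute for a single rack, which is why high-flow manifolds feature so prominently in these designs.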

2. Power Architecture Rewritten

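Feeding a 600 kW rack forces a rethink of electrical delivery. NVIDIA’s forthcoming 800 V DC “AI Factory” architecture is more than an efficiency tweak; it is a survival mechanism. For a fixed power draw, raising the distribution voltage lowers the current in proportion, and resistive losses in busbars and cables fall with the square of that current. By shifting to high-voltage direct current, the company aims to reduce conversion losses and enable denser power delivery to GPUs while simplifying rack design.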
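A quick calculation shows why the voltage matters. The sketch below compares the current and resistive loss for the same 600 kW load at 800 V and at 54 V, the latter taken here as an assumed baseline typical of present-day rack busbars. The conductor resistance is a made-up illustrative value, and the function names are hypothetical.

```python
# Current and I^2 * R loss for the same load at two distribution voltages.
# Assumptions: purely resistive conductor, identical resistance in both cases.

def bus_current_a(power_w: float, voltage_v: float) -> float:
    """Current (amperes) needed to deliver power_w at voltage_v."""
    return power_w / voltage_v


def resistive_loss_w(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Power dissipated in a conductor of resistance_ohm carrying the load current."""
    current_a = bus_current_a(power_w, voltage_v)
    return current_a ** 2 * resistance_ohm


if __name__ == "__main__":
    rack_power_w = 600_000.0     # rack figure quoted in the article
    path_resistance_ohm = 1e-4   # hypothetical 0.1 milliohm distribution path
    for voltage in (54.0, 800.0):  # 54 V is an assumed legacy baseline
        amps = bus_current_a(rack_power_w, voltage)
        loss = resistive_loss_w(rack_power_w, voltage, path_resistance_ohm)
        print(f"{voltage:5.0f} V: {amps:8.0f} A, loss {loss:9.1f} W")
```

At 800 V the rack needs 750 A rather than more than 11,000 A, and for the same conductor the resistive loss drops by a factor of (800/54)², or a bit over 200. In practice designers trade part of that margin for thinner, cheaper copper.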

However, these racks no longer plug into conventional data halls. They demand substation-grade power infrastructure, with localized transformers, new busbar materials, and AI-optimized load-balancing. In effect, the AI industry is building micro-utilities around compute clusters.

3. Cooling Becomes a Core Competitive Advantage

In the age of 2.3 kW GPUs, thermal design is strategy. Cloud providers and hyperscalers are investing in proprietary cooling IP; reported examples include Google’s immersion systems, Microsoft’s liquid datacenter islands, and Tesla’s in-house “Thermal Stacks.” Rubin Ultra amplifies this arms race.

Expect specialized vendors to emerge around microfluidic packaging, phase-change materials, and predictive thermal control algorithms. In parallel, the energy recovery side—turning waste heat into usable energy—will become a defining metric of efficiency and sustainability.

As one semiconductor engineer noted privately, “In the Rubin era, watts are the new FLOPs.”

4. The Economic and Environmental Dimension

Every leap in AI capability now carries a thermodynamic price tag. Running continuously at 600 kW, a single Kyber rack would consume about as much electricity as roughly 500 average U.S. homes, assuming a typical household uses around 10,500 kWh per year. Multiplied across thousands of installations, the global AI sector’s energy footprint risks rivaling that of medium-sized nations.
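The household comparison is simple arithmetic, sketched below. The 600 kW rack figure comes from the text; the household consumption of about 10,500 kWh per year is an assumed round number for an average U.S. home, and the utilization parameter and function names are hypothetical.

```python
# Annual energy of one rack, expressed as an equivalent number of homes.
# Assumption: average household use of ~10,500 kWh/year (round illustrative value).

HOURS_PER_YEAR = 8760
ASSUMED_HOME_KWH_PER_YEAR = 10_500.0


def rack_annual_energy_kwh(rack_power_kw: float, utilization: float = 1.0) -> float:
    """Energy (kWh) used in a year by a rack drawing rack_power_kw,
    scaled by the fraction of time it runs at that power."""
    return rack_power_kw * HOURS_PER_YEAR * utilization


def equivalent_homes(rack_power_kw: float,
                     home_kwh_per_year: float = ASSUMED_HOME_KWH_PER_YEAR,
                     utilization: float = 1.0) -> float:
    """Number of average homes whose yearly consumption matches the rack's."""
    return rack_annual_energy_kwh(rack_power_kw, utilization) / home_kwh_per_year


if __name__ == "__main__":
    kwh = rack_annual_energy_kwh(600.0)
    homes = equivalent_homes(600.0)
    print(f"{kwh / 1e6:.2f} GWh per year, about {homes:.0f} homes")
```

At full utilization this comes to about 5.3 GWh per year for one rack, or on the order of 500 homes under the stated assumption; a lower utilization scales the result down proportionally.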

This pressure will accelerate two parallel forces:

- Infrastructure regionalization – hyperscalers locating data centers near renewable energy and water resources (Iceland, Canada, or northern Europe).

- Compute efficiency innovation – from photonic interconnects to neural compression models that reduce inference energy per token.
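The "inference energy per token" metric mentioned above is just power divided by throughput. The sketch below shows the relationship; the throughput numbers are placeholders chosen for illustration, not measurements of any real system, and the function name is hypothetical.

```python
# Energy per token = average power draw / token throughput.
# The throughput figures below are illustrative placeholders, not benchmarks.

def energy_per_token_j(power_w: float, tokens_per_s: float) -> float:
    """Joules consumed per generated token at a given power and throughput."""
    if tokens_per_s <= 0:
        raise ValueError("tokens_per_s must be positive")
    return power_w / tokens_per_s


if __name__ == "__main__":
    gpu_power_w = 2300.0  # per-GPU figure quoted in the article
    for throughput in (500.0, 1000.0, 2000.0):  # hypothetical tokens per second
        joules = energy_per_token_j(gpu_power_w, throughput)
        print(f"{throughput:6.0f} tok/s -> {joules:.2f} J/token")
```

The point of the metric is that doubling throughput at constant power halves the energy per token, so efficiency gains can come from software and model design as well as from silicon.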

Ultimately, NVIDIA’s roadmap is not just an engineering story—it’s an economic signal. The next phase of AI expansion will hinge as much on energy policy and thermal management as on transistor design.

5. The Dawn of the Thermodynamic Internet

If Blackwell represented the scaling of intelligence, Rubin Ultra represents the scaling of entropy management. Future AI facilities will operate as living systems—balancing heat, water, power, and compute in real time. The invisible substrate of AI progress will be thermodynamic governance: how efficiently we convert watts into reasoning.

The 2.3 kW GPU is more than a chip—it’s the moment computing admits that physics has joined the design team.
