More than two years into the public diffusion of modern generative AI, a basic question still nags managers, workers, and policymakers: which parts of work are people actually doing with AI, and what does that imply for different occupations? Tomlinson, Jaffe, Wang, Counts, and Suri tackle this head‑on by analyzing 200,000 anonymized Bing Copilot conversations from U.S. users in 2024. They turn messy, everyday interactions into a data‑driven portrait of how generative AI maps onto work activities and occupations, and they construct an “AI applicability” score that estimates where today’s systems are most relevant to job tasks. arXiv

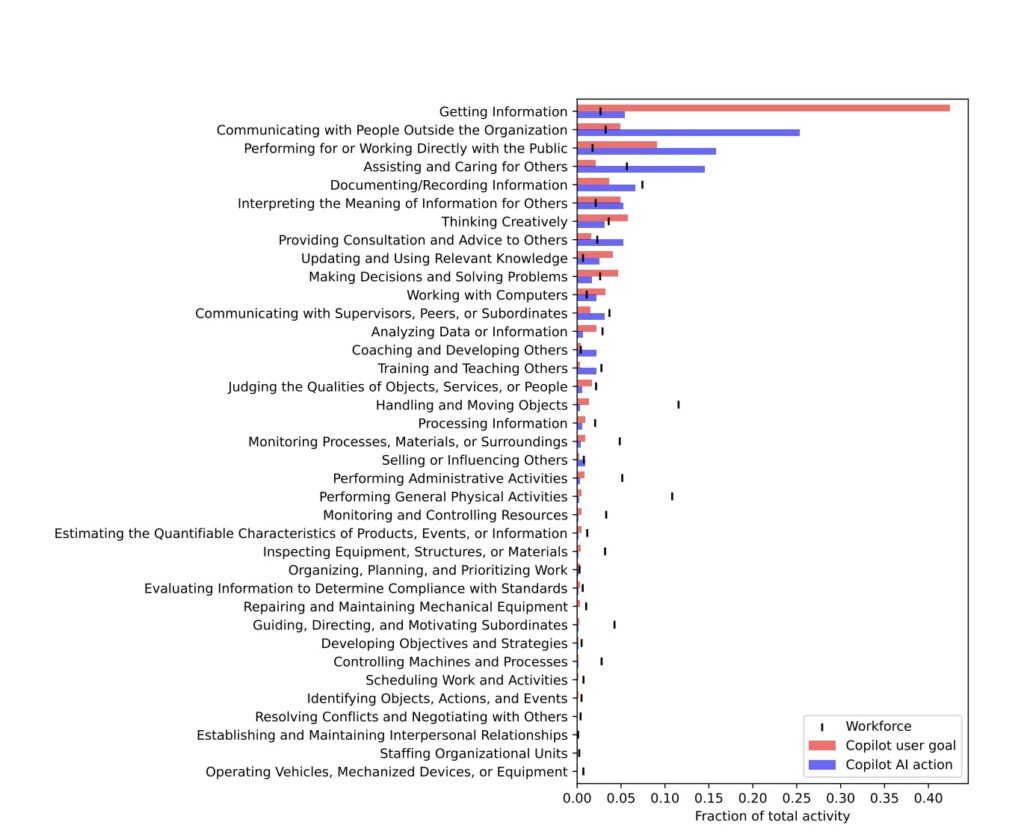

From prompts to “work”: user goals vs. AI actions

A central conceptual move in the paper is to separate what the user is trying to accomplish (“user goals”) from what the AI actually does in the exchange (“AI actions”). That distinction matters because AI often supports a task without performing it. For example, when a user is trying to fix a printer (the user’s goal: operating office equipment), the AI’s action is training or advising. In their data, the set of activities assisted by AI and the set performed by AI are disjoint in roughly 40% of conversations, underscoring that augmentation and automation are not the same phenomenon. arXiv
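To make the disjointness figure concrete, here is a minimal Python sketch of how such a rate could be computed once each conversation carries two sets of IWA labels. It is not the authors' pipeline: the conversations and label strings are invented for illustration and are not O*NET identifiers.

```python
# Toy data (hypothetical): each conversation carries the IWA labels assigned to
# the user's goal and to the AI's action. Labels are illustrative strings only.
conversations = [
    {"user_goals": {"Operate office equipment"},
     "ai_actions": {"Train others to use equipment"}},
    {"user_goals": {"Gather information"},
     "ai_actions": {"Gather information", "Explain information"}},
    {"user_goals": {"Write material"}, "ai_actions": {"Write material"}},
    {"user_goals": {"Resolve customer problems"}, "ai_actions": {"Advise others"}},
    {"user_goals": {"Analyze data"}, "ai_actions": {"Analyze data"}},
]


def disjoint_share(convs):
    """Fraction of conversations whose user-goal and AI-action label sets share nothing."""
    disjoint = sum(1 for c in convs if c["user_goals"].isdisjoint(c["ai_actions"]))
    return disjoint / len(convs)


print(f"{disjoint_share(conversations):.0%}")  # prints "40%" for this toy data
```

The printer case is the first row: the user wants to operate equipment, the AI trains, and the two label sets never meet.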

To anchor these conversations in the labor market, the authors classify each exchange using O*NET’s hierarchical taxonomy of work, focusing on Intermediate Work Activities (IWAs)—generalizable activities that cut across occupations (332 IWAs in total). This choice avoids the noise of trying to infer a user’s job from a single chat and lets the authors ask a cleaner question: Which generic activities are people leveraging AI for? arXiv

Data and measurement

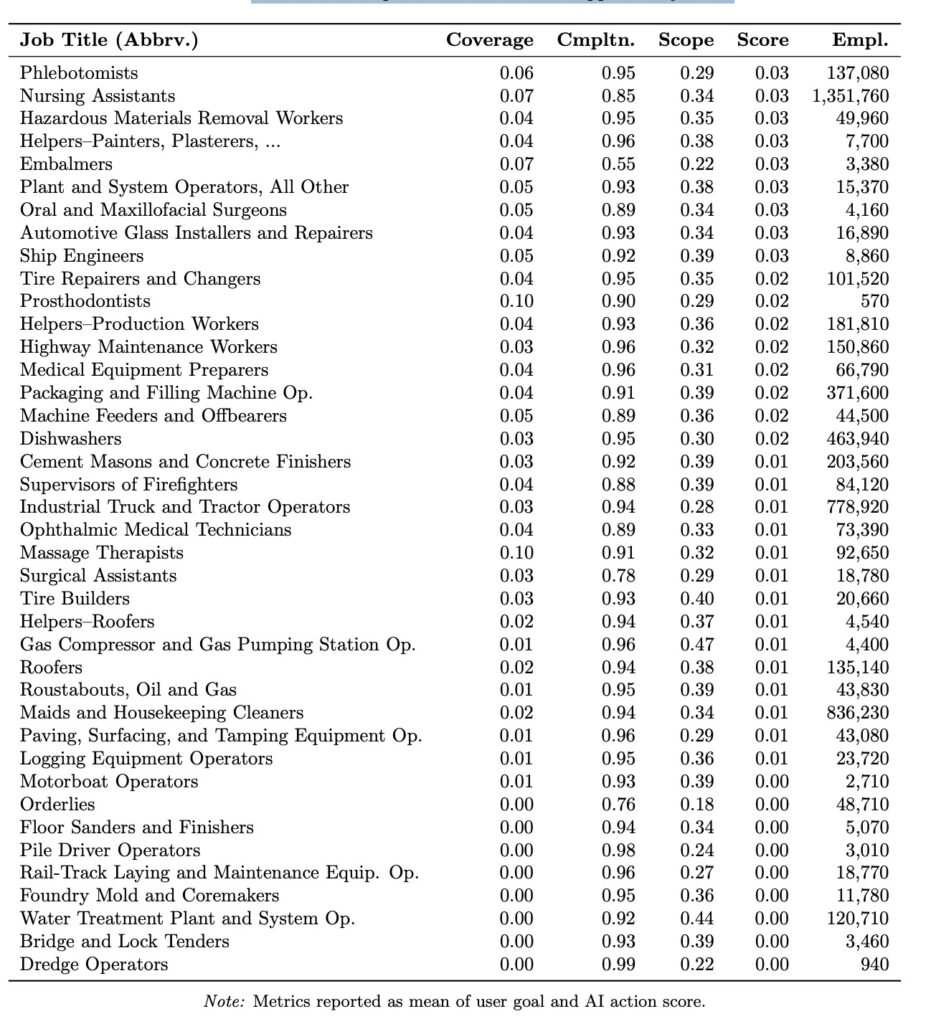

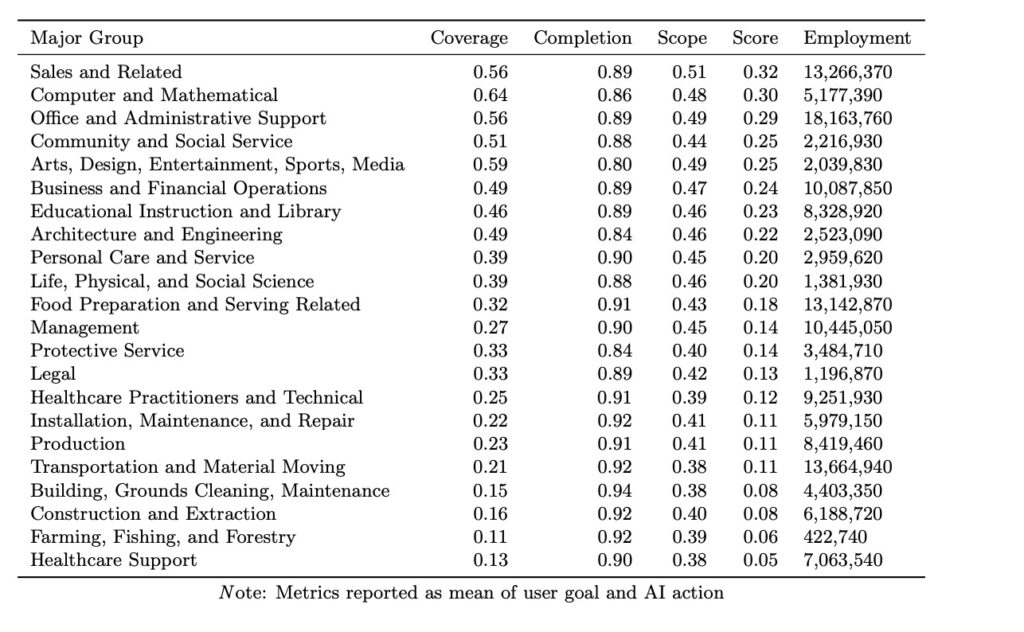

The analysis draws on two U.S. samples spanning Jan–Sep 2024: a representative Copilot‑Uniform set (~100k conversations) and a Copilot‑Thumbs set (~100k conversations that include thumbs‑up/down reactions). Using a GPT‑4o–based pipeline, the authors label each conversation's user goals and AI actions with every relevant IWA. They then use a lighter LLM (GPT‑4o‑mini) to judge task completion, and they rate impact scope (how much of an activity the AI contributed to) on a six‑point scale. These ingredients feed a single AI applicability score per occupation that blends (i) coverage of the occupation’s activities in Copilot data (an activity counts as covered only if its share of Copilot activity is at least 0.05%), (ii) completion rates, and (iii) scope. Activities are weighted by O*NET relevance, so those more central to an occupation count more. arXiv
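The paper's exact formula is not reproduced here. The sketch below assumes one simple way to blend the three ingredients: an activity contributes only if it clears the 0.05% coverage threshold, its contribution is completion rate times scope (with scope rescaled to the range 0 to 1), and contributions are averaged using O*NET relevance weights. `ActivityStats`, `applicability`, and all numbers are hypothetical.

```python
from dataclasses import dataclass

# Coverage threshold described in the paper: 0.05% of activity share.
COVERAGE_THRESHOLD = 0.0005


@dataclass
class ActivityStats:
    """Hypothetical per-IWA summary statistics from usage data."""
    share: float       # fraction of Copilot activity labeled with this IWA
    completion: float  # fraction of that activity judged complete
    scope: float       # impact scope rescaled to [0, 1] (an assumption)


def applicability(relevance_weights, stats):
    """Relevance-weighted applicability for one occupation (illustrative formula).

    relevance_weights maps each of the occupation's IWAs to its O*NET relevance.
    Multiplying completion by scope is an assumed way to blend the ingredients.
    """
    total = sum(relevance_weights.values())
    if total == 0:
        return 0.0
    score = 0.0
    for iwa, weight in relevance_weights.items():
        s = stats.get(iwa)
        if s is None or s.share < COVERAGE_THRESHOLD:
            continue  # uncovered activities contribute nothing to the numerator
        score += weight * s.completion * s.scope
    return score / total


# Toy usage with made-up numbers.
stats = {
    "Gather information": ActivityStats(share=0.12, completion=0.90, scope=0.6),
    "Write material": ActivityStats(share=0.08, completion=0.80, scope=0.5),
    "Operate machinery": ActivityStats(share=0.0001, completion=0.70, scope=0.2),
}
weights = {"Gather information": 2.0, "Write material": 1.0, "Operate machinery": 1.0}
print(round(applicability(weights, stats), 2))  # 0.37; machinery is below the threshold
```

Because uncovered activities still count in the denominator, an occupation dominated by activities that rarely appear in chat data scores low under this sketch even if the AI does well on its few covered activities.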

What people actually do with AI

In real use, information gathering and writing dominate as user goals, followed by communicating with others. On the AI side, the most common actions are providing information and assistance, writing, and teaching/advising—that is, AI often functions as a coach, explainer, or service agent to the human. These are also the activities with the highest satisfaction and completion rates, suggesting practical usefulness at the point of work. arXiv

The team validates their success metrics by showing a strong correlation between IWA‑level satisfaction and completion (weighted r ≈ 0.83 for user goals and 0.76 for AI actions). At the conversation level, thumbs‑up and completion correlate but are noisier (r ≈ 0.28), so the study relies more on completion when constructing applicability. They also find that impact scope—how much of an activity the AI covers—captures a different dimension than success and is a better predictor of which activities people seek assistance for. arXiv
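The IWA‑level validation rests on a weighted Pearson correlation. A minimal implementation follows; weighting each activity by its conversation count is an assumption made for illustration, as are the toy satisfaction and completion rates.

```python
import math


def weighted_pearson(x, y, w):
    """Pearson correlation of paired values x, y with non-negative weights w."""
    total = sum(w)
    mx = sum(wi * xi for wi, xi in zip(w, x)) / total
    my = sum(wi * yi for wi, yi in zip(w, y)) / total
    cov = sum(wi * (xi - mx) * (yi - my) for wi, xi, yi in zip(w, x, y))
    vx = sum(wi * (xi - mx) ** 2 for wi, xi in zip(w, x))
    vy = sum(wi * (yi - my) ** 2 for wi, yi in zip(w, y))
    return cov / math.sqrt(vx * vy)


# Hypothetical per-IWA rates, weighted by how many conversations each IWA has.
satisfaction = [0.62, 0.70, 0.55, 0.80]
completion = [0.75, 0.82, 0.70, 0.90]
conversation_counts = [120, 300, 40, 210]
print(round(weighted_pearson(satisfaction, completion, conversation_counts), 2))
```

Averaging many conversations into one rate per activity smooths out per‑conversation noise, which plausibly explains why an IWA‑level r can sit far above the conversation‑level r between a single thumbs reaction and a single completion label.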

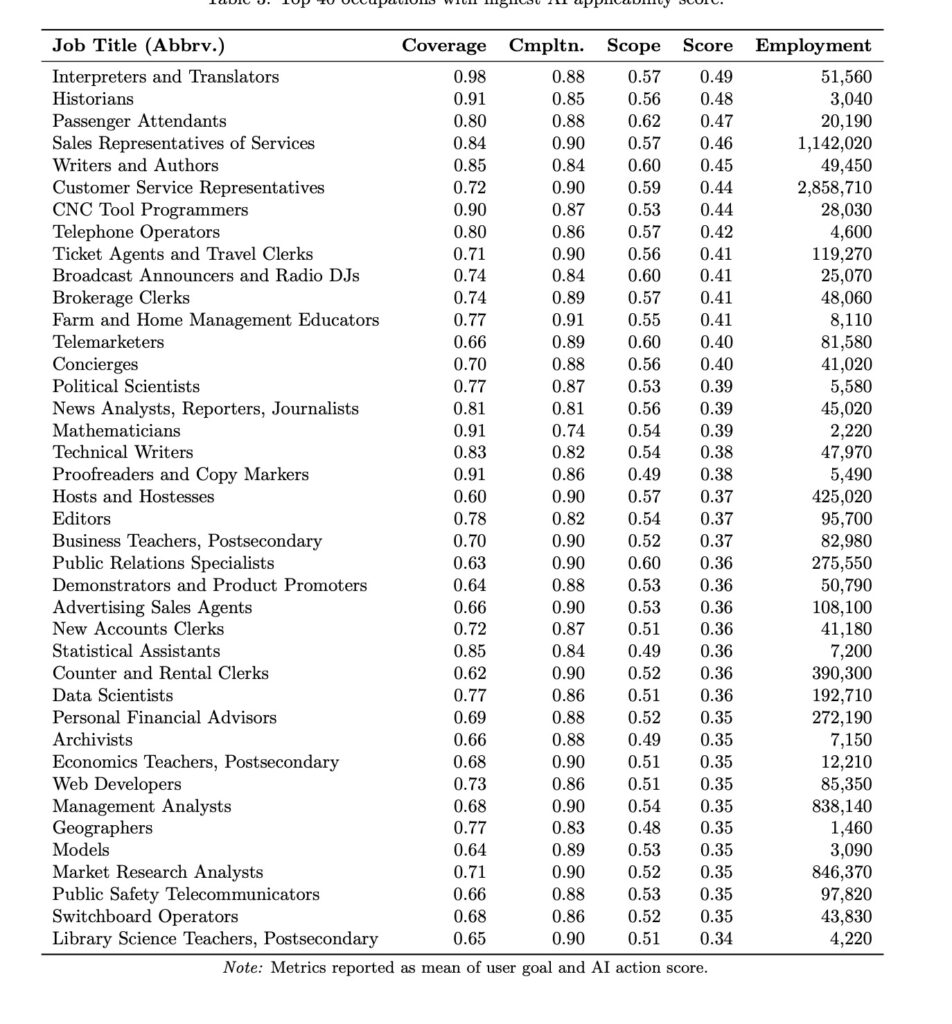

Where applicability is highest (and narrowest)

Aggregating from activities to occupations reveals a clear pattern: knowledge‑ and communication‑heavy roles (especially in Sales; Computer & Mathematical; Office & Administrative Support; Community & Social Service; Arts/Design/Media; Business & Financial Operations; and Educational Instruction & Library) show the highest AI applicability. In contrast, occupations anchored in physical manipulation, machinery, or on‑site presence display narrower applicability: AI can advise or plan but cannot perform the core physical work. arXiv

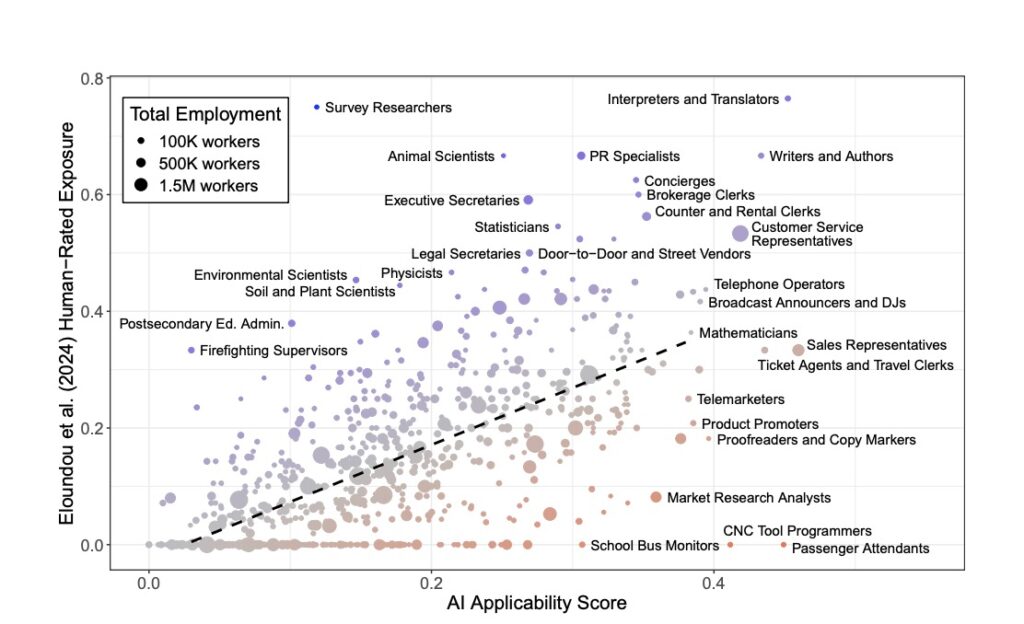

How the results compare to ex‑ante predictions

The authors compare their usage‑based metric to ex‑ante exposure estimates from Eloundou et al. (which ask whether LLMs could cut task time by 50% or more). Despite measuring different things, the two line up surprisingly well: occupation‑level correlation of r ≈ 0.73, rising to 0.91 when aggregated to major occupation groups. Outliers are revealing: for instance, Market Research Analysts and CNC Tool Programmers appear more applicable in usage than predicted, whereas roles like Passenger Attendants and School Bus Monitors look less applicable, a reminder that actual usage can depart from prior expert judgment. arXiv

Socioeconomic correlates: wages and education

Contrary to a simple “only high‑wage jobs are affected” narrative, the study finds a weak overall relationship between AI applicability and average wage (employment‑weighted r ≈ 0.07; modestly higher when excluding the very top wages). At the same time, occupations whose modal requirement is a Bachelor’s degree show higher applicability on average than those with lower requirements (employment‑weighted mean ≈ 0.27 vs. 0.19), with considerable overlap between the two distributions. The education gap is more pronounced on the AI‑action side, suggesting that AI more often performs parts of activities in bachelor‑level roles, while in other roles it may assist more narrowly. arXiv
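The education comparison uses employment‑weighted means, so large occupations count for more than small ones. A minimal sketch with hypothetical rows (the occupation names, scores, and employment counts are not the paper's data):

```python
from collections import defaultdict

# Hypothetical rows: (occupation, applicability score, employment, modal education).
occupations = [
    ("Occupation A", 0.35, 1200, "Bachelor's"),
    ("Occupation B", 0.22, 500, "Bachelor's"),
    ("Occupation C", 0.30, 2000, "Below bachelor's"),
    ("Occupation D", 0.10, 1500, "Below bachelor's"),
    ("Occupation E", 0.05, 800, "Below bachelor's"),
]


def weighted_means_by_group(rows):
    """Employment-weighted mean applicability for each education group."""
    totals = defaultdict(lambda: [0.0, 0])  # [sum of score * employment, employment]
    for _name, score, employment, education in rows:
        totals[education][0] += score * employment
        totals[education][1] += employment
    return {group: s / e for group, (s, e) in totals.items()}


for group, mean in weighted_means_by_group(occupations).items():
    print(f"{group}: {mean:.2f}")
```

A gap in means says nothing about overlap on its own; two groups can differ on average while many individual occupations in the lower group still outscore ones in the higher group.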

Augmentation, automation, and the limits of inference

By distinguishing user goals from AI actions, the paper avoids conflating capability with organizational choice. Even if AI can perform portions of an activity, whether firms redeploy labor (augment) or reduce it (automate) is a separate, downstream decision, and the authors do not observe those decisions. Their data describe capabilities in use, not time saved or employment effects; they also caution that overlap between AI capabilities and an occupation’s activities is often moderate rather than complete. In short: high applicability flags where AI can help today, but not how employers will reshape work tomorrow. arXiv

Strengths, limits, and what to do with the findings

Methodologically, the study’s strengths include (1) behavioral evidence from at‑scale, real‑world use; (2) a task‑based framework grounded in O*NET; and (3) validation that combines user feedback with LLM‑judged completion and an explicit measure of scope. Still, several cautions apply. The data come from one mainstream assistant (Copilot) in one country and time window; usage reflects that product’s features and user base. The mapping from conversations to activities depends on LLM classifiers (albeit validated), and the 0.05% coverage threshold and weighting choices, while sensible, are still modeling decisions. Finally, the approach cannot observe firm‑level process change, time savings, or job redesign. These caveats don’t undercut the contribution; they delimit what the results do and do not claim. arXiv

Practical implications

For workers, the results suggest upskilling payoffs in activities where applicability is already high: research and synthesis, drafting and revision, customer communication, and structured advising. For managers, the disjunction between user goals and AI actions is a design opportunity: workflows that position AI as coach, pre‑writer, and info‑gatherer can deliver immediate gains without pretending the system will do the entire job. For educators and training programs, bachelor‑level curricula should explicitly teach “AI‑aware” versions of writing, analysis, and communication—skills the study shows are already being amplified in the wild. For policymakers, the weak wage gradient cautions against assuming AI affects only elite occupations; exposure is broader and more varied, especially in large, lower‑wage occupational groups like Sales and Office/Admin Support.

Bottom line

This paper replaces speculation with measurement. In actual use, generative AI is strongest today at finding, structuring, and explaining information and at drafting and revising text. Those capabilities most clearly touch knowledge‑ and communication‑centric occupations, yet nearly every occupational group shows some potential, often via augmentation rather than full automation. The method, which maps live AI use onto a task taxonomy and tracks completion and scope, offers a durable way to monitor the moving frontier of AI’s relevance to work over time. arXiv

Sources: Tomlinson, Jaffe, Wang, Counts, & Suri, “Working with AI: Measuring the Occupational Implications of Generative AI,” arXiv:2507.07935 (2025). Citations correspond to paper pages for abstract and study overview, data/methods, results, figures, and discussion.