By Thorsten Meyer | ThorstenMeyerAI.com | February 2026

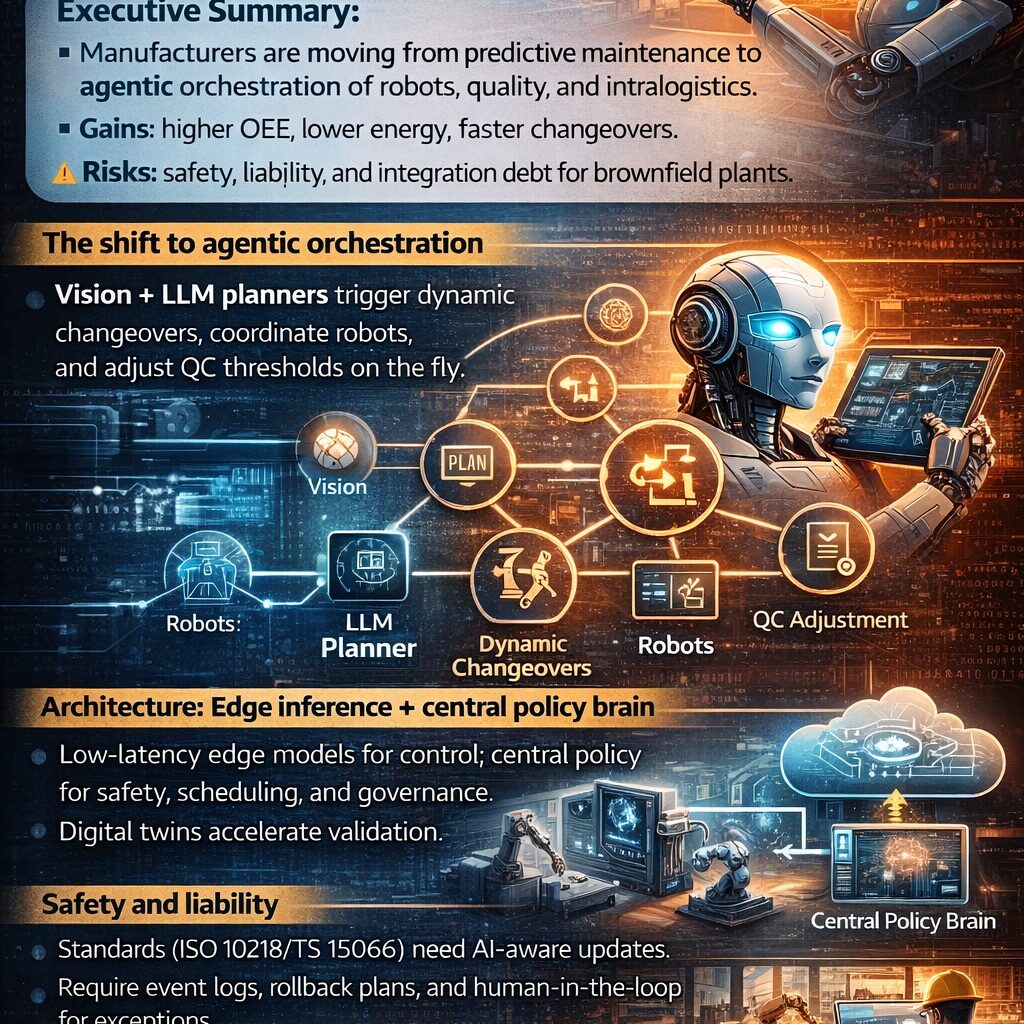

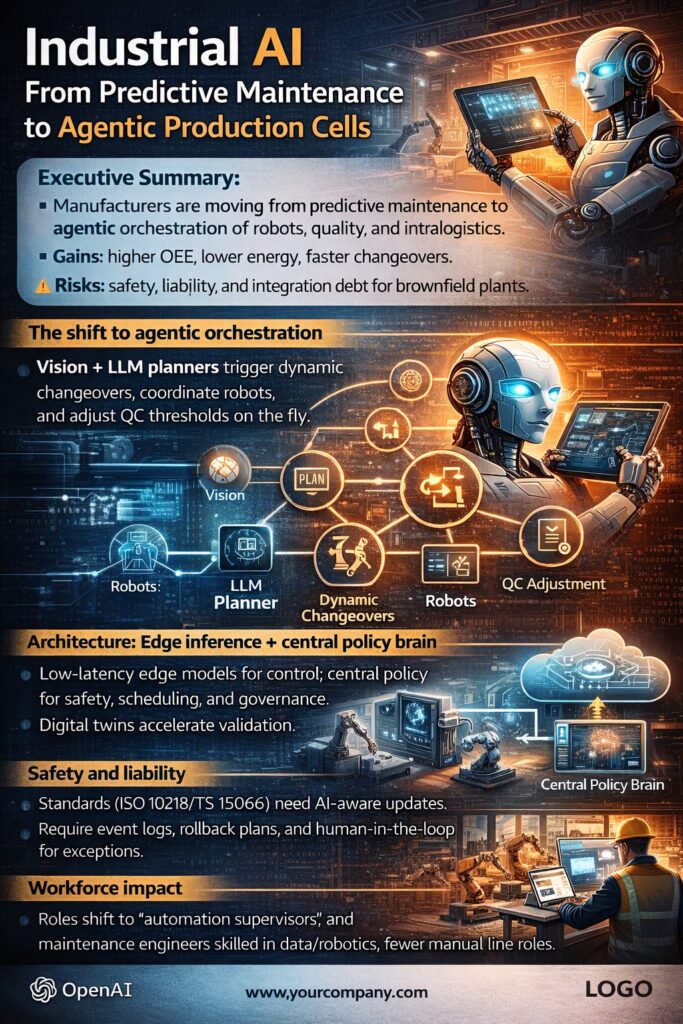

Executive Summary

For the past decade, “AI in manufacturing” meant one thing: predictive maintenance. Bolt a vibration sensor to a motor, train a model on failure data, get an alert before the bearing seizes. Useful. Incremental. And no longer anywhere close to the frontier.

The manufacturers who will dominate the next cycle are not the ones predicting when machines break. They’re the ones building agentic production cells—autonomous systems where vision models, LLM-based planners, and robotic actuators coordinate in real time to run changeovers, adjust quality thresholds, and orchestrate intralogistics without waiting for a human to click “approve.”

The gains are not theoretical. OEE improvements of 5–15%. Energy consumption down 15–20%. Changeover times collapsing from hours to minutes. Unplanned downtime cut by up to 50%.

But the risks are equally real: safety frameworks written for dumb robots, liability regimes that assume a human pressed the button, and decades of integration debt buried in brownfield plants running on PLCs older than the engineers maintaining them.

This is not a technology story. It’s an organizational reckoning.

The Predictive Maintenance Ceiling

Let’s give predictive maintenance its due. The global market hit $10.93 billion in 2024 and is on track to exceed $70 billion by 2032. Companies deploying AI-driven predictive maintenance report 25% lower maintenance costs, 10–20% higher uptime, and ROI of 10:1 within two years. Deloitte’s numbers. Not vendor slides.

But here’s the problem: predictive maintenance is passive. It tells you what will fail. It doesn’t decide what to do about it. It doesn’t reschedule production. It doesn’t reroute material flow. It doesn’t negotiate priorities across competing production orders. It sends an alert and waits for a human.

In a world where Deloitte predicts agentic AI adoption in manufacturing will quadruple, from 6% to 24%, by the end of 2026, “alerting and waiting” is a competitive liability. The AI market in manufacturing is projected to surge from $8.57 billion in 2025 to $230.95 billion by 2034, a CAGR of 44.2%. The money is moving from monitoring to acting.
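The growth rate quoted above can be sanity-checked with the standard compound annual growth rate formula. A minimal sketch, assuming a nine-year span from 2025 to 2034; the function name `cagr` is illustrative only.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` years."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text: $8.57B (2025) to $230.95B (2034), i.e. 9 years.
rate = cagr(8.57, 230.95, 2034 - 2025)
print(f"Implied CAGR: {rate:.1%}")
```

The result lands at roughly 44%, so the market-size endpoints and the quoted growth rate are consistent with each other.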

The question is no longer “Can we predict failures?” It’s “Can we build systems that respond to the entire production environment autonomously?”

The Shift to Agentic Orchestration

What “Agentic” Actually Means on a Factory Floor

Drop the buzzwords for a moment. An agentic production cell is a system that:

- Perceives its environment through vision systems, IoT sensors, and digital twins

- Reasons about what to do using LLM-based planners that understand production context

- Acts by coordinating robots, conveyors, quality inspection, and material handling

- Learns from outcomes to improve future decisions

This is not a chatbot bolted onto a SCADA system. This is a fundamentally different architecture where the AI doesn’t assist the operator—it replaces the decision loop for routine operations while escalating genuine exceptions to humans.
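To make the perceive, reason, act, learn loop concrete, here is a minimal sketch. It is an illustration under stated assumptions, not a reference design: the class `CellAgent`, its fields, and the temperature rule used to decide what counts as routine are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class CellAgent:
    perceive: Callable[[], dict]             # read sensors, vision, twin state
    plan: Callable[[dict], dict]             # planner turns an observation into an action
    act: Callable[[dict], bool]              # execute; True on success
    is_routine: Callable[[dict, dict], bool]  # is this inside validated territory?
    history: list = field(default_factory=list)
    escalations: list = field(default_factory=list)

    def step(self) -> str:
        observation = self.perceive()
        action = self.plan(observation)
        if not self.is_routine(observation, action):
            # Genuine exception: hand it to a human instead of acting.
            self.escalations.append((observation, action))
            return "escalated"
        succeeded = self.act(action)
        # Outcomes are recorded so a later "learn" stage can use them.
        self.history.append((observation, action, succeeded))
        return "done" if succeeded else "failed"


agent = CellAgent(
    perceive=lambda: {"part": "A", "temp_c": 41.0},
    plan=lambda obs: {"command": "continue"},
    act=lambda action: True,
    is_routine=lambda obs, action: obs["temp_c"] < 60.0,  # hypothetical rule
)
print(agent.step())
```

The point of the structure is the branch in the middle: routine decisions never wait for a person, and anything else never executes without one.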

Vision + LLM Planners: The New Control Paradigm

The combination of computer vision and large language models is what makes this possible. A recent framework published in Scientific Reports demonstrates LLM-controlled changeover robots that automatically complete production line changeovers—addressing the manual intervention, low efficiency, and high error rates that plague traditional approaches.

Here’s how it works in practice:

- Vision models running at 90+ frames per second detect product variants, defects, and positional deviations on the line

- LLM planners interpret the visual data alongside production schedules, quality requirements, and equipment states to generate action plans

- Robotic actuators execute the plans—adjusting grippers, changing tools, repositioning fixtures—without waiting for a recipe change ticket
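One practical consequence of letting an LLM generate action plans is that its output has to be checked before anything moves. The sketch below assumes the planner returns a JSON list of steps and validates it against an allow-list. The action names and the plan format are hypothetical, not taken from the cited framework.

```python
import json

# Hypothetical allow-list of actions the cell is permitted to execute.
ALLOWED_ACTIONS = {"change_tool", "adjust_gripper", "reposition_fixture"}


def parse_plan(raw: str) -> list[dict]:
    """Parse planner output and reject anything outside the allow-list."""
    steps = json.loads(raw)
    if not isinstance(steps, list):
        raise ValueError("plan must be a JSON list of steps")
    for index, step in enumerate(steps):
        if not isinstance(step, dict) or step.get("action") not in ALLOWED_ACTIONS:
            raise ValueError(f"step {index}: action not allowed: {step!r}")
    return steps


planner_output = (
    '[{"action": "change_tool", "tool": "T12"},'
    ' {"action": "adjust_gripper", "width_mm": 42}]'
)
plan = parse_plan(planner_output)
print([step["action"] for step in plan])
```

A plan containing an action outside the allow-list raises `ValueError` instead of reaching the actuators.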

BMW is already there. Their Spartanburg plant uses AI inspection and assembly support that checks component placement and corrects misaligned parts in real time. Savings: over $1 million per year at a single plant.

Toyota improved forecast accuracy by 20% and planner productivity by 18% using agentic approaches.

These aren’t pilot programs. These are production systems generating measurable P&L impact.

Dynamic Changeovers: The Killer Application

If you want to understand why agentic AI matters for manufacturing, look at changeovers.

In traditional manufacturing, switching a production line from Product A to Product B requires:

- A production planner to schedule the change

- An operator to load the new recipe

- A technician to adjust machine parameters

- A quality engineer to validate the first articles

- The result: typically 30 minutes to 4 hours of lost production

In an agentic production cell:

- The planner agent detects that the current batch is completing and the next order requires a different configuration

- It generates a changeover sequence, coordinating robot movements, tool changes, and parameter adjustments

- Vision systems validate the changeover in real time

- Quality thresholds adjust automatically based on the new product specification

- The line is running again in minutes, not hours
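At its simplest, generating a changeover sequence means comparing the current cell configuration with the one the next order needs. A minimal sketch, assuming configurations are flat key-value settings; the parameter names and values are hypothetical, and a real planner would also order steps by physical dependencies.

```python
def changeover_steps(current: dict, target: dict) -> list[str]:
    """List the adjustments needed to move a cell from `current` to `target`."""
    steps = []
    for key in sorted(target):
        if current.get(key) != target[key]:
            steps.append(f"set {key}: {current.get(key)} -> {target[key]}")
    return steps


# Hypothetical cell settings for two products.
product_a = {"tool": "T12", "gripper_width_mm": 42, "oven_temp_c": 180}
product_b = {"tool": "T07", "gripper_width_mm": 42, "oven_temp_c": 195}

for step in changeover_steps(product_a, product_b):
    print(step)
```

Only the settings that differ produce steps, which is exactly why a well-sequenced changeover can be short.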

This is where OEE gains come from. Not from squeezing another percentage point out of availability through better failure prediction—but from eliminating the dead time that predictive maintenance never touched.
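The arithmetic behind that claim is straightforward. OEE is the product of availability, performance, and quality, and changeover time hits availability directly. The sketch below uses made-up shift numbers purely for illustration; none of the figures come from a real plant.

```python
def availability(planned_min: float, changeover_min: float, other_downtime_min: float) -> float:
    """Share of planned time the line is actually running."""
    return (planned_min - changeover_min - other_downtime_min) / planned_min


def oee(availability_rate: float, performance_rate: float, quality_rate: float) -> float:
    """Overall equipment effectiveness as the product of its three factors."""
    return availability_rate * performance_rate * quality_rate


SHIFT_MIN = 480  # hypothetical 8-hour shift

# Same performance and quality; only the changeover time differs.
before = oee(availability(SHIFT_MIN, changeover_min=60, other_downtime_min=30), 0.90, 0.95)
after = oee(availability(SHIFT_MIN, changeover_min=10, other_downtime_min=30), 0.90, 0.95)

print(f"OEE before: {before:.1%}, after: {after:.1%}")
```

With performance and quality held constant, cutting one 60-minute changeover to 10 minutes moves OEE by several points on its own.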

Architecture: Edge Inference + Central Policy Brain

The Latency Imperative

A robot arm moving at production speed cannot wait 200 milliseconds for a cloud inference to decide whether to grip or release. Industrial AI architectures must split intelligence across two tiers:

| Layer | Function | Latency Requirement | Deployment |
|---|---|---|---|
| Edge | Real-time perception, control decisions, safety interlocks | < 10 ms | On-machine or cell-level NPUs |
| Central | Production scheduling, policy updates, cross-cell optimization, governance | Seconds to minutes | On-premise or private cloud |

Edge AI processors now achieve single-digit millisecond inference latency while consuming 10–20x less power than GPUs. By 2026, an estimated 80% of industrial AI inference will happen at the edge, not in the cloud. This isn’t a trend—it’s physics. You cannot control a physical process from a data center 50 milliseconds away.
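The split can be expressed as a simple routing rule: a decision may go to the central tier only if its deadline survives the network round trip. A minimal sketch; the function name and the example deadlines are assumptions for illustration.

```python
def choose_tier(deadline_ms: float, central_round_trip_ms: float) -> str:
    """Send a decision to the central tier only if its deadline tolerates the round trip."""
    if deadline_ms < central_round_trip_ms:
        return "edge"
    return "central"


# A grip/release decision with a single-digit millisecond deadline.
print(choose_tier(deadline_ms=8, central_round_trip_ms=50))
# Rescheduling an order, where a minute is acceptable.
print(choose_tier(deadline_ms=60_000, central_round_trip_ms=50))
```

In practice the deadline would also have to cover inference time on whichever tier is chosen; the sketch leaves that out to keep the rule visible.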

The Central Policy Brain

The edge handles reflexes. The central brain handles strategy.

The policy layer manages:

- Production scheduling: Which orders run on which cells, in what sequence, optimized across energy costs, material availability, and delivery commitments

- Safety governance: Enforcing operational envelopes, speed limits, force thresholds—overriding edge decisions that would violate safety constraints

- Quality policy: Setting and adjusting acceptance criteria based on customer specifications, historical defect patterns, and statistical process control

- Cross-cell coordination: Orchestrating material flow between production cells, buffer management, and intralogistics routing

This is where LLMs earn their keep. Not by controlling a servo motor, but by reasoning about production trade-offs in natural language, making decisions that previously required a shift supervisor with 20 years of experience.
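The safety-governance role listed above is the one part of the policy layer that should be boring and deterministic. A minimal sketch of an envelope that clamps whatever the edge proposes; the class name, field names, and limit values are hypothetical and are not taken from any standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SafetyEnvelope:
    max_speed_mm_s: float
    max_force_n: float

    def enforce(self, command: dict) -> dict:
        """Return a copy of `command` with speed and force clamped to the envelope."""
        speed = min(command["speed_mm_s"], self.max_speed_mm_s)
        force = min(command["force_n"], self.max_force_n)
        overridden = (speed, force) != (command["speed_mm_s"], command["force_n"])
        return {**command, "speed_mm_s": speed, "force_n": force, "overridden": overridden}


# Illustrative limits only.
envelope = SafetyEnvelope(max_speed_mm_s=250.0, max_force_n=140.0)
edge_command = {"speed_mm_s": 400.0, "force_n": 90.0}
safe_command = envelope.enforce(edge_command)
print(safe_command)
```

The envelope is frozen on purpose: nothing downstream, including the planner, can loosen the limits at runtime.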

Digital Twins: The Validation Accelerator

You cannot test an agentic production cell on a live production line. The cost of a wrong decision at production speed is too high—damaged products, crashed robots, injured operators.

Digital twins solve this. As we enter 2026, digital twin technology has evolved from conceptual models to operational, intelligent systems. A Nature study published in January 2025 demonstrated real-time physics-based co-simulation using edge AI and federated learning—proving that digital twins can validate agentic control policies before they touch physical equipment.

The workflow:

- Train the agentic system in a digital twin that mirrors the physical cell

- Validate against edge cases—product variants, machine degradation, supply disruptions

- Deploy to the physical cell with confidence that the policy has been stress-tested

- Monitor divergence between twin and reality to catch model drift early

Companies skipping this step are playing Russian roulette with production assets worth millions.
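The last step of that workflow, monitoring divergence between twin and reality, can start as something very simple. A minimal sketch, assuming the twin and the physical cell both report the same signal (cycle time here) and that mean absolute error against a fixed tolerance is an acceptable first drift check. The data and the tolerance are made up.

```python
from statistics import fmean


def divergence(twin: list[float], measured: list[float]) -> float:
    """Mean absolute error between twin predictions and sensor readings."""
    if len(twin) != len(measured) or not twin:
        raise ValueError("need two non-empty series of equal length")
    return fmean(abs(t - m) for t, m in zip(twin, measured))


def drift_detected(twin: list[float], measured: list[float], tolerance: float) -> bool:
    """True when the twin no longer tracks the physical cell closely enough."""
    return divergence(twin, measured) > tolerance


# Hypothetical cycle times in seconds.
predicted_cycle_s = [12.0, 12.1, 11.9, 12.0]
observed_cycle_s = [12.1, 12.6, 12.8, 13.1]
print(drift_detected(predicted_cycle_s, observed_cycle_s, tolerance=0.5))
```

When the check fires, the policy validated in the twin should no longer be trusted on the physical cell until the twin is recalibrated.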

Safety and Liability: The Unresolved Crisis

Standards Written for Dumb Robots

ISO 10218 received a major overhaul in April 2025. ISO/TS 15066’s collaborative robot requirements were folded into the new ISO 10218-2:2025. Cybersecurity requirements were added. Functional safety was clarified.

Good progress. Not nearly enough.

Here’s what the updated standards still don’t address:

| What Standards Cover | What Standards Don’t Cover |
|---|---|
| Static safety zones and force limits | Dynamic safety zones that change based on AI decisions |
| Pre-programmed collaborative applications | LLM-planned actions that weren’t explicitly programmed |
| Cybersecurity of the robot controller | Adversarial attacks on vision models that alter perception |
| Functional safety of deterministic systems | Safety verification of non-deterministic neural networks |

The fundamental problem: ISO 10218 assumes the robot’s behavior is predictable because it was explicitly programmed. An agentic production cell generates novel behavior sequences in response to real-time conditions. The safety case for a system whose behavior is emergent, not scripted, requires entirely new verification methodologies.

What Responsible Deployment Requires

Until standards catch up—and they won’t before 2028 at the earliest—manufacturers deploying agentic systems need to self-impose:

1. Comprehensive Event Logging

Every decision the agentic system makes must be logged with full context: sensor inputs, model outputs, action taken, outcome observed. Not for compliance theater—for post-incident reconstruction. When (not if) something goes wrong, you need a forensic trail that explains why the system did what it did.
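A minimal sketch of what one such log record could look like, written as one JSON object per line so it can be appended and replayed later. The field names mirror the four items above; the class `DecisionEvent`, the cell identifier, and the example values are hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class DecisionEvent:
    cell_id: str
    sensor_inputs: dict
    model_output: dict
    action_taken: str
    outcome: str
    timestamp: str = ""  # filled in with UTC time at serialization if left empty

    def to_json_line(self) -> str:
        record = asdict(self)
        record["timestamp"] = self.timestamp or datetime.now(timezone.utc).isoformat()
        return json.dumps(record, sort_keys=True)


event = DecisionEvent(
    cell_id="cell-07",
    sensor_inputs={"spindle_temp_c": 61.2},
    model_output={"plan": "reduce_feed_rate", "confidence": 0.93},
    action_taken="reduce_feed_rate",
    outcome="temp_stabilized",
)
line = event.to_json_line()
print(line)
```

Keeping inputs, model output, action, and outcome in a single record is what makes reconstruction possible: no joins across systems are needed to answer why the cell acted.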

2. Deterministic Rollback Plans

Every agentic action must have a deterministic fallback. If the LLM planner generates a changeover sequence that fails mid-execution, the system must be able to revert to a known-safe state without human intervention. This means hard-coded safety envelopes that the AI cannot override, regardless of what the model “thinks” is optimal.
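One way to get a deterministic fallback is to pair every step with its own undo and unwind completed steps in reverse when something fails. A minimal sketch under that assumption; the step names, the `state` dictionary, and the simulated failure are hypothetical.

```python
from typing import Callable

# Each step is (name, do, undo).
Step = tuple[str, Callable[[], None], Callable[[], None]]


def run_with_rollback(steps: list[Step]) -> bool:
    """Run steps in order; on any failure, undo completed steps in reverse."""
    completed: list[Step] = []
    for step in steps:
        _name, do, _undo = step
        try:
            do()
            completed.append(step)
        except Exception:
            for _done_name, _done_do, undo in reversed(completed):
                undo()
            return False
    return True


state = {"tool": "T12", "fixture": "A"}


def jammed_fixture() -> None:
    raise RuntimeError("fixture jammed")  # simulated mid-changeover failure


steps: list[Step] = [
    ("swap tool", lambda: state.update(tool="T07"), lambda: state.update(tool="T12")),
    ("move fixture", jammed_fixture, lambda: None),
]
ok = run_with_rollback(steps)
print(ok, state)
```

The undo for the failing step itself is not called here; a real cell would need each step to be either atomic or safe to undo when only partially applied.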

3. Human-in-the-Loop for Exceptions

Agentic doesn’t mean unsupervised. The system handles routine operations autonomously. But any action outside the validated operational envelope—unusual sensor readings, novel product variants, equipment behavior outside historical norms—triggers a human review before execution.

The operating principle: autonomy for the predictable, human authority for the novel.
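That principle translates into a gate in front of every action. The sketch below assumes the validated envelope is a set of per-signal ranges plus a list of known product variants; all names and limits are hypothetical.

```python
# Hypothetical validated operating ranges and product variants.
VALIDATED_ENVELOPE = {
    "spindle_temp_c": (20.0, 70.0),
    "vibration_mm_s": (0.0, 4.5),
}
KNOWN_VARIANTS = {"A", "B"}


def needs_human_review(readings: dict, variant: str) -> list[str]:
    """Return the reasons an action must wait for a human; empty means proceed."""
    reasons = []
    if variant not in KNOWN_VARIANTS:
        reasons.append(f"novel product variant: {variant}")
    for name, value in readings.items():
        limits = VALIDATED_ENVELOPE.get(name)
        if limits is None:
            reasons.append(f"unvalidated signal: {name}")
        elif not limits[0] <= value <= limits[1]:
            reasons.append(f"{name}={value} outside [{limits[0]}, {limits[1]}]")
    return reasons


print(needs_human_review({"spindle_temp_c": 55.0}, "A"))
print(needs_human_review({"spindle_temp_c": 82.0}, "C"))
```

Returning the reasons, rather than a bare yes or no, gives the reviewer the context they need and feeds directly into the event log.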

The Liability Gap

Here’s the question nobody wants to answer: when an agentic system makes a decision that causes a defective product, a damaged machine, or an injured worker—who is liable?

- The robot manufacturer who built the hardware?

- The AI vendor who provided the model?

- The systems integrator who configured the cell?

- The plant manager who approved autonomous operation?

Current product liability frameworks weren’t designed for systems that generate novel behavior. And as I’ve written before, the EU’s AI Liability Directive and Product Liability Directive expansion are making this worse, not better—creating legal uncertainty that discourages deployment rather than clarifying accountability.

Manufacturers deploying agentic systems today are operating in a liability gray zone. The smart ones are documenting everything, maintaining human override authority, and building insurance arguments from the ground up. The reckless ones are hoping nothing goes wrong.

Workforce Impact: The Honest Conversation

The Roles That Are Disappearing

Let’s not sugarcoat this.

Between 2024 and 2025, production roles, healthcare support, grounds cleaning, and low-wage manufacturing occupations declined—driven by automation, new technology, and outsourcing. An agentic production cell that handles its own changeovers, quality inspection, and material routing eliminates specific human tasks:

- Manual machine operators who load recipes and adjust parameters

- Visual quality inspectors whose checks are now performed by vision systems running at 90 fps

- Material handlers whose routes are now optimized and executed by AGVs

- First-line supervisors whose primary role was translating production schedules into floor actions

This is not hypothetical. This is happening now.

The Roles That Are Emerging

But the factory doesn’t empty out. It transforms.

Automation Supervisors: The human who oversees 5–10 agentic production cells, intervening only on exceptions the system escalates. This role requires understanding both the production process and the AI system’s decision logic. When a vision-system fault occurs, the supervisor decides whether to halt the line at $4,000 per minute or implement temporary manual inspection. That judgment call isn’t going away.

Data/Robotics Maintenance Engineers: The technician who no longer just replaces bearings, but also retrains models, recalibrates vision systems, tunes control parameters, and diagnoses whether a quality deviation is a process issue or a model drift issue. Ford’s brownfield automation strategy explicitly emphasizes this hybrid skill set.

AI-Assisted Technicians: Manufacturers are discovering that AI can compress years of apprenticeship into months of AI-assisted learning. A novice technician with an AI copilot that surfaces relevant maintenance history, procedure steps, and diagnostic guidance can approach the effectiveness of a 10-year veteran in a fraction of the time.

The Skills Gap Is the Real Bottleneck

The technology exists. The economics work. The constraint is people.

More than one-third of manufacturing executives cite workforce skills as their top talent concern. The manufacturing workforce skews older than the national average. And the skills needed—data literacy, robotics programming, AI system management—aren’t what traditional manufacturing training programs teach.

Companies that invest in workforce transformation now will have a 3–5 year advantage over those that wait. Those that treat agentic AI as a pure cost-reduction play—cutting headcount without building new capabilities—will find themselves with technology they can’t operate and problems they can’t diagnose.

The Brownfield Reality

The Integration Debt Nobody Wants to Talk About

Here’s what the vendor demos don’t show you: most manufacturing runs on brownfield plants. Equipment installed in the 1990s. PLCs running proprietary protocols. Historians storing data in formats that predate JSON. MES systems customized beyond recognition by integrators who retired a decade ago.

Ford’s approach is instructive. They’re retrofitting existing lines with scalable robotics and in-house AI, emphasizing vendor-agnostic orchestration that normalizes telemetry from different equipment vendors. This is the hard, unglamorous work of making agentic AI function in reality rather than in a greenfield demo.

The integration challenges are concrete:

| Challenge | Impact | Mitigation |
|---|---|---|
| Legacy protocols (Modbus, Profibus, proprietary) | Cannot communicate with modern AI infrastructure | Protocol gateways and edge translators |
| Missing sensor data | AI models starved of inputs | Retrofit sensor packages (vibration, thermal, visual) |
| Inconsistent data formats | Training data unusable without extensive cleaning | Data normalization layers at the edge |
| Siloed systems (MES, ERP, QMS) | No unified production context for AI decisions | Integration middleware with API abstraction |
| Change management resistance | Operators bypass or distrust AI recommendations | Phased rollout with measurable wins first |

A greenfield smart factory can deploy agentic AI in 12–18 months. A brownfield retrofit takes 3–5 years to reach equivalent capability. Most of the world’s manufacturing capacity is brownfield. Plan accordingly.
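Much of that retrofit time goes into work like the normalization layer from the table above. A minimal sketch of what it does, assuming two vendors that report temperature in different units and under different field names. The vendor labels, field names, and payloads are invented for illustration.

```python
def normalize(vendor: str, raw: dict) -> dict:
    """Map vendor-specific telemetry onto one schema (temperature in Celsius)."""
    if vendor == "vendor_a":
        # Hypothetical format: Fahrenheit in "tempF", numeric machine id in "id".
        temp_c = (raw["tempF"] - 32) * 5 / 9
        machine = raw["id"]
    elif vendor == "vendor_b":
        # Hypothetical format: tenths of a degree Celsius in "temp_dC".
        temp_c = raw["temp_dC"] / 10
        machine = raw["machine"]
    else:
        raise ValueError(f"no adapter for {vendor}")
    return {"machine_id": str(machine), "temp_c": round(temp_c, 1)}


print(normalize("vendor_a", {"id": 17, "tempF": 158.0}))
print(normalize("vendor_b", {"machine": "press-3", "temp_dC": 702}))
```

Every legacy machine needs an adapter like this before its data is usable, which is a large part of why brownfield timelines stretch.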

The Energy Equation

One aspect of agentic production cells that doesn’t get enough attention: energy.

AI-driven process optimization can reduce industrial energy consumption by 15–20%. A framework called Sustain AI demonstrated an 18.75% reduction in energy consumption and 20% decrease in CO2 emissions through AI-driven scheduling optimization alone.

In an era where 67% of supply chain leaders identify ESG regulations as a top-five strategic driver, agentic AI isn’t just a productivity play—it’s a sustainability play. Systems that dynamically adjust production speed, batch sizes, and scheduling based on real-time energy pricing and carbon intensity create genuine environmental value while reducing costs.

This matters especially for European manufacturers facing high energy costs and aggressive decarbonization targets. An agentic production cell that optimizes for energy efficiency alongside throughput and quality can be the difference between a competitive factory and a shuttered one.
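Scheduling against energy prices can be illustrated with a sliding window: given hourly prices, find the cheapest contiguous slot for an energy-intensive batch. This is a deliberately small sketch, not the Sustain AI method; the price series is made up, and a real scheduler would also weigh delivery dates and carbon intensity.

```python
def cheapest_start(prices_per_kwh: list[float], duration_h: int) -> int:
    """Return the start hour whose `duration_h`-hour window has the lowest total price."""
    if not 0 < duration_h <= len(prices_per_kwh):
        raise ValueError("duration must fit within the price horizon")
    window_costs = [
        sum(prices_per_kwh[start:start + duration_h])
        for start in range(len(prices_per_kwh) - duration_h + 1)
    ]
    return window_costs.index(min(window_costs))


# Hypothetical hourly prices for the next eight hours.
hourly_prices = [0.31, 0.28, 0.12, 0.10, 0.11, 0.25, 0.33, 0.30]
start = cheapest_start(hourly_prices, duration_h=3)
print(f"Start the 3-hour batch at hour {start}")
```

For this price series the batch shifts to the low-price hours in the middle of the horizon, with no change to the work itself.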

What to Do Now

For manufacturing leaders reading this, the playbook is straightforward even if the execution is hard:

1. Stop treating AI as a maintenance tool. Predictive maintenance was the gateway drug. The real value is in autonomous orchestration of entire production cells. If your AI roadmap ends at “fewer unplanned breakdowns,” you’re leaving 80% of the value on the table.

2. Start with changeovers. Dynamic changeover optimization is the highest-ROI application of agentic AI in discrete manufacturing. It’s measurable, it’s bounded, and it pays for itself in months, not years.

3. Invest in edge infrastructure. You cannot run agentic AI on a 2015 network architecture. Sub-10ms inference latency requires edge compute at the cell level. Budget for it.

4. Build the digital twin before you build the cell. Every agentic control policy should be validated in simulation before it touches physical equipment. This is not optional safety theater—it’s engineering discipline.

5. Solve the people problem first. Hire or train the automation supervisors and data-literate maintenance engineers you’ll need. The technology will wait. The talent won’t.

6. Document everything for the liability gap. Until standards and liability frameworks catch up, your event logs, rollback procedures, and human-in-the-loop protocols are your legal defense. Build them from day one.

The Bottom Line

The factory of 2030 will not be operated by humans staring at HMI screens and clicking “approve.” It will be operated by agentic systems that perceive, reason, and act—with humans governing policy, handling exceptions, and making the decisions that require judgment machines don’t yet have.

The manufacturers who build this capability now—starting with changeovers, investing in edge architecture, training their workforce, and honestly confronting the safety and liability gaps—will capture OEE improvements, energy savings, and competitive advantages that compound year over year.

The manufacturers who wait for the standards to be perfect, the liability frameworks to be clear, and the brownfield integration to be easy will wait forever. The technology is moving. The question is whether you’re moving with it.

Predictive maintenance was the warm-up. Agentic orchestration is the game.

It’s time to let the factory think.

Thorsten Meyer is an AI strategist who believes manufacturing’s AI revolution will be won on factory floors, not in conference keynotes. Follow his work at ThorstenMeyerAI.com

Sources:

- Dataiku: Manufacturing’s 2026 Mandate—From AI Pilot to Agentic Profit

- ManufacturingTomorrow: The Dawn of Agentic Autonomy—Defining the 2026 Smart Factory

- Deloitte: Agentic AI Strategy—Tech Trends 2026

- Deloitte: 2025 Smart Manufacturing and Operations Survey

- IDC: Charting the AI-Driven Future of Manufacturing

- Nature: Digital Twin Driven Smart Factories—Real-Time Physics-Based Co-Simulation

- Scientific Reports (Nature Portfolio): LLM-Based Framework for Reconfigurable Production Line Changeover Task Planning

- ISO 10218-1:2025—Robotics Safety Requirements

- The Robot Report: ISO 10218 Industrial Robot Safety Standard Receives Major Overhaul

- Industry Today: 2026—The Rise of Agentic Smart Factories

- Automotive Manufacturing Solutions: Inside Ford’s Brownfield Automation Strategy

- Fortune: AI Will Infiltrate the Industrial Workforce in 2026

- Manufacturing Dive: 5 Manufacturing Trends to Watch in 2026

- MDPI Sustainability: Sustain AI—A Multi-Modal Deep Learning Framework for Carbon Footprint Reduction

- A3: Industrial AI in Action—Predictive Maintenance and Operational Efficiency at Scale

- TechAhead: The Rise of Edge AI in Manufacturing—Enterprise Trends for 2026

- RD World: 2026 AI Story—Inference at the Edge, Not Just Scale in the Cloud

- WEF: Why Large Language Models Are the Future of Manufacturing